
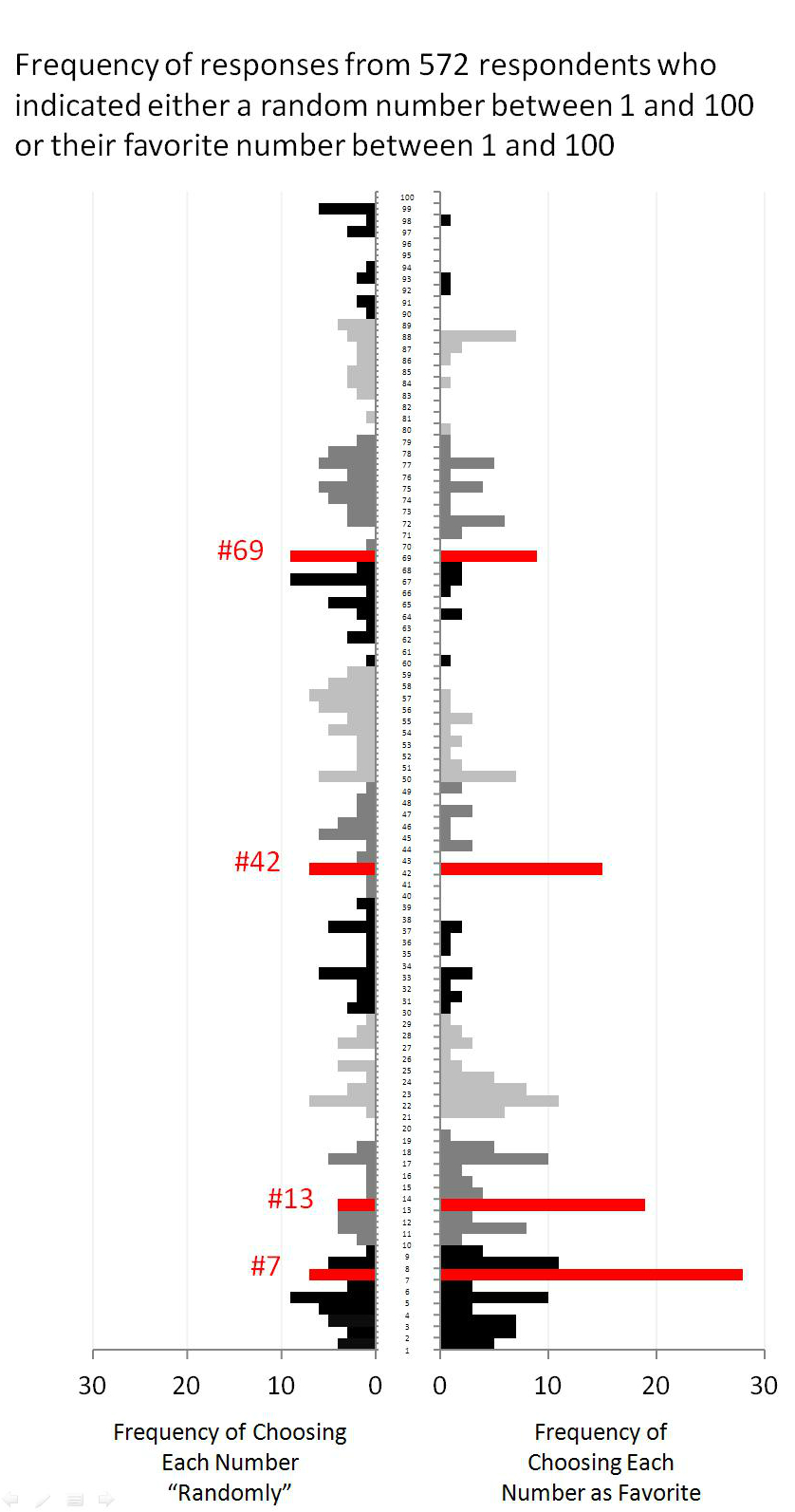

Some people were asked to name their favorite number, others were asked to give a random number:

More here. Via Justin Wolfers.

Matthew Rabin was here last week presenting his work with Erik Eyster about social learning. The most memorable theme of their papers is what they call “anti-imitation.” It’s the subtle incentive to do the opposite of someone in your social network even if you have the same preferences and there are no direct strategic effects.

You are probably familiar with the usual herding logic. People in your social network have private information about the relative payoff of various actions. You see their actions but not their information. If their action reveals they have strong information in favor of it you should copy them even if you have private information that suggests doing the opposite.

Most people who know this logic probably equate social learning with imitation and eventual herding. But Eyster and Rabin show that the same social learning logic very often prescribes doing the opposite of people in your social network. Here is a simple intuition. Start with a different, but simpler problem. Suppose that your friend makes an investment and his level of investment reveals how optimistic he is. His level of optimism is determined by two things, his prior belief and any private information he received.

You don’t care about his prior, it doesn’t convey any information that’s useful to you but you do want to know what information he got. The problem is the prior and the information are entangled together and just by observing his investment you can’t tease out whether he is optimistic because he was optimistic a priori or because he got some bullish information.

Notice that if somebody comes and tells you that his prior was very bullish this will lead you to downgrade your own level of optimism. Because holding his final beliefs fixed, the more optimistic was his prior the less optimistic must have been his new information, and it’s that new information that matters for your beliefs. You want to do the opposite of his prior.
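The "holding his final beliefs fixed" step can be made concrete with a minimal sketch. It assumes (this is not from the Eyster and Rabin papers) a binary state, say whether the investment is good, and beliefs updated by Bayes' rule, so that in log-odds the posterior is just the prior plus the private information. The function names and the numbers 0.8, 0.5 and 0.7 are hypothetical, chosen only for illustration.

```python
import math


def log_odds(p: float) -> float:
    """Convert a probability into log-odds."""
    return math.log(p / (1.0 - p))


def to_prob(z: float) -> float:
    """Convert log-odds back into a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def implied_information(friend_posterior: float, friend_prior: float) -> float:
    """Bayes' rule in log-odds says posterior = prior + information,
    so the friend's private information is the difference."""
    return log_odds(friend_posterior) - log_odds(friend_prior)


def your_belief(your_prior: float, friend_posterior: float, friend_prior: float) -> float:
    """Combine your own prior with the friend's information only (not his prior)."""
    info = implied_information(friend_posterior, friend_prior)
    return to_prob(log_odds(your_prior) + info)


# Hypothetical numbers: the friend's investment reveals a final belief of 0.8.
belief_if_prior_neutral = your_belief(0.5, friend_posterior=0.8, friend_prior=0.5)
belief_if_prior_bullish = your_belief(0.5, friend_posterior=0.8, friend_prior=0.7)
print(belief_if_prior_neutral, belief_if_prior_bullish)
```

With the same observed investment, learning that his prior was 0.7 rather than 0.5 lowers your own belief from 0.8 to about 0.63. The more bullish his prior, the less his action says about his information.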

This is the basic force behind anti-imitation. (By the way I found it interesting that the English language doesn’t seem to have a handy non-prefixed word that means “doing the opposite of.”) Suppose now your friend got his prior beliefs from observing his friend. And now you see not only your friend’s investment level but his friend’s too. You have an incentive to do the opposite of his friend for exactly the same reason as above.

This assumes his friend’s action conveys no information of direct relevance for your own decision. And that leads to the prelim question. Consider a standard herding model where agents move in sequence first observing a private signal and then acting. But add the following twist. Each agent’s signal is relevant only for his action and the action of the very next agent in line. Agent 3 is like you in the example above. He wants to anti-imitate agent 1. But what about agents 4, 5, 6, etc.?

If you are like me and you believe that thinking is a better path to success than not thinking, it’s hard not to take it personally when an athlete or other performer who is choking is said to be “overthinking it.” He needs to get “untracked.” And if he does and reaches peak performance he is said to be “unconscious.”

There are experiments that seem to confirm the idea that too much thinking harms performance. But here’s a model in which thinking always improves performance and which is still consistent with the empirical observation that thinking is negatively correlated with performance.

In any activity we rely on two systems: one which is conscious, deliberative and requires “thinking.” The other is instinctive. Using the deliberative system always gives better results but the deliberation requires the scarce resource of our moment-to-moment attention. So for any sufficiently complex activity we have to ration the limited capacity of the deliberative system and offload many aspects of performance to pre-programmed instincts.

But for most activities we are not born with an instinctive knowledge of how to do them. What we call “training” is endless rehearsal of an activity which establishes that instinct. With enough training, when circumstances demand we can offload the activity to the instinctive system in order to conserve precious deliberation for whatever novelties we are facing which truly require original thinking.

An athlete or performer who has been unsettled, unnerved, or otherwise knocked out of his rhythm finds that his instinctive system is failing him. The wind is playing tricks with his toss and so his serve is falling apart. Fortunately for him he can start focusing his attention on his toss and his serve and this will help. He will serve better as a result of overthinking his serve.

But there is no free lunch. The shock to his performance has required him to allocate more than usual of his deliberative resources to his serve and therefore he has less available for other things. He is overthinking his serve and as a result his overall performance must suffer.

(Conversation with Scott Ogawa.)

Here is a nice essay on the idea that “over thinking” causes choking. It begins with this study:

A classic study by Timothy Wilson and Jonathan Schooler is frequently cited in support of the notion that experts, when performing at their best, act intuitively and automatically and don’t think about what they are doing as they are doing it, but just do it. The study divided subjects, who were college students, into two groups. In both groups, participants were asked to rank five brands of jam from best to worst. In one group they were asked to also explain their reasons for their rankings. The group whose sole task was to rank the jams ended up with fairly consistent judgments both among themselves and in comparison with the judgments of expert food tasters, as recorded in Consumer Reports. The rankings of the other group, however, went haywire, with subjects’ preferences neither in line with one another’s nor in line with the preferences of the experts. Why should this be? The researchers posit that when subjects explained their choices, they thought more about them.

Suppose you and a friend of the opposite sex are recruited for an experiment. You are brought into separate rooms and told that you will be asked some questions and, unless you give consent, all of your answers will be kept secret.

First you are asked whether you would like to hook up with your friend. Then you are asked whether you believe your friend would like to hook up with you. These are just setup questions. Now come the important ones. Assuming your friend would like to hook up with you, would you like to know that? Assuming your friend is not interested, would you like to know that? And would you like your friend to know that you know?

Assuming your friend is interested, would you like your friend to know whether you are interested? Assuming your friend is not interested, same question. And the higher-order question as well.

These questions are eliciting your preferences over you and your friend’s beliefs about (beliefs about…) you and your friend’s preferences. This is one context where the value of information is not just instrumental (i.e. it helps you make better decisions) but truly intrinsic. For example I would guess that most people, if they are interested and they know that the other is not, would strictly prefer that the other not know that they are interested. Because that would be embarrassing.

And I bet that if you are not interested and you know that the other is interested you would not like the other to know that you know that she is interested. Because that would be awkward.

Notice in fact that there is often a strict preference for less information. And that’s what makes the design of a matching mechanism complicated. Because in order to find matches (i.e. discover and reveal mutual interest) you must commit to reveal the good news. In other words, if you and your friend both inform the experimenters that you are interested and that you want the other to know that, then in order to capitalize on the opportunity the information must be revealed.

But any mechanism which reveals the good news unavoidably reveals some bad news precisely when the good news is not forthcoming. If you are interested and you want to know when she is interested and you expect that whenever she is indeed interested you will get your wish, then when you don’t get your wish you find out that she is not interested.

Fortunately though there is a way to minimize the embarrassment. The following simple mechanism does pretty well. Both friends tell the mediator whether they are interested. If, and only if, both are interested the mediator informs both that there is a mutual interest. Now when you get the bad news you know that she has learned nothing about your interest. So you are not embarrassed.

However it doesn’t completely get rid of the awkwardness. When she is not interested she knows that *if* you are interested you have learned that she is not interested. Now she doesn’t know that this state of affairs has occurred for sure. She thinks it has occurred if and only if you are interested so she thinks it has occurred with some moderate probability. So it is moderately awkward. And indeed you know that she is not interested and therefore feels moderately awkward.
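Here is a minimal sketch of that mediator, just to lay out who learns what in each of the four cases. The function names and the wording of the inferences are hypothetical, and the sketch assumes both friends report truthfully.

```python
from itertools import product


def mediator(a_interested: bool, b_interested: bool) -> bool:
    """Announce a match (to both friends) if and only if both reported interest."""
    return a_interested and b_interested


def inference_about_other(own_interest: bool, match_announced: bool) -> str:
    """What one friend can deduce about the other from their own report
    and the mediator's (non-)announcement."""
    if match_announced:
        return "other is interested"
    if own_interest:
        # I said yes and heard nothing, so the other must have said no.
        return "other is not interested"
    # I said no, so silence was guaranteed whatever the other reported.
    return "nothing"


for you, she in product([True, False], repeat=2):
    announced = mediator(you, she)
    print(
        f"you={you!s:5} she={she!s:5} | "
        f"you learn: {inference_about_other(you, announced):24} | "
        f"she learns: {inference_about_other(she, announced)}"
    )
```

The row where you are interested and she is not is the one that matters: you get the bad news, while she learns nothing about your interest. What the table cannot show is the higher-order awkwardness, since she still knows that if you were interested you now know she is not.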

The theoretical questions are these: under what specification of preferences over higher-order beliefs over preferences is the above mechanism optimal? Is there some natural specification of those preferences in which some other mechanism does better?

Update: Ran Spiegler points me to this related paper.

A balanced take in the New Yorker. Here is an excerpt.

A core objection is that neuroscientific “explanations” of behavior often simply re-state what’s already obvious. Neuro-enthusiasts are always declaring that an MRI of the brain in action demonstrates that some mental state is not just happening but is really, truly, is-so happening. We’ll be informed, say, that when a teen-age boy leafs through the Sports Illustrated swimsuit issue areas in his brain associated with sexual desire light up. Yet asserting that an emotion is really real because you can somehow see it happening in the brain adds nothing to our understanding. Any thought, from Kiss the baby! to Kill the Jews!, must have some route around the brain. If you couldn’t locate the emotion, or watch it light up in your brain, you’d still be feeling it. Just because you can’t see it doesn’t mean you don’t have it. Satel and Lilienfeld like the term “neuroredundancy” to “denote things we already knew without brain scanning,” mockingly citing a researcher who insists that “brain imaging tells us that post-traumatic stress disorder (PTSD) is a ‘real disorder.’ ”

And

It’s perfectly possible, in other words, to have an explanation that is at once trivial and profound, depending on what kind of question you’re asking. The strength of neuroscience, Churchland suggests, lies not so much in what it explains as in the older explanations it dissolves. She gives a lovely example of the panic that we feel in dreams when our legs refuse to move as we flee the monster. This turns out to be a straightforward neurological phenomenon: when we’re asleep, we turn off our motor controls, but when we dream we still send out signals to them. We really are trying to run, and can’t.

We were interviewed by the excellent Jessica Love for Kellogg Insight. It’s about 12 minutes long. Here’s one bit I liked:

We go around in our lives and we collect information about what we should do, what we should believe and really all that matters after we collect that information is the beliefs that we derive from them and it’s hard to keep track of all the things we learn in our lives and most of them are irrelevant once we have accounted for them in our beliefs, the particular pieces of information we can forget as long as we remember what beliefs we should have. And so a lot of times what we are left with after this is done are beliefs that we feel very strongly about and someone comes and interrogates us about what’s the basis of our beliefs and we can’t really explain it and we probably can’t convince them and they say, well you have these irrational beliefs. But it’s really just an optimization that we’re doing, collecting information, forming our beliefs and then saving our precious memory by discarding all of the details.

I wish I could formalize that.

One form of mental accounting is where you give yourself separate budgets for things like food, entertainment, gas, etc. It’s suboptimal because these separate budgets make you less flexible in your consumption plans. For example in a month where there are many attractive entertainment offerings, you are unable to reallocate spending away from other goods in favor of entertainment.

But it could be understood as a second-best solution when you have memory limitations. Suppose that when you decide how much to spend on groceries, you often forget or even fail to think of how much you have been spending on gas this month. If so, then it’s not really possible to be as flexible as you would be in the first-best because there’s no way to reduce your grocery expenditures in tandem with the increased spending on gas.

That means that you should not increase your spending on gas. In other words you should stick to a fixed gas budget.

Now memory is associative, i.e. current experiences stimulate memories of related experiences. This can give some structure to the theory. It makes sense to have a budget for entertainment overall rather than separate budgets for movies and concerts because when you are thinking of one you are likely to recall your spending on the other. So the boundaries of budget categories should be determined by an optimal grouping of expenditures based on how closely associated they are in memory.

(Discussion with Asher Wolinsky and Simone Galperti)

Oliver Sacks on the social costs of plagiarism stigma:

Helen Keller was accused of plagiarism when she was only twelve. Though deaf and blind from an early age, and indeed languageless before she met Annie Sullivan at the age of six, she became a prolific writer once she learned finger spelling and Braille. As a girl, she had written, among other things, a story called “The Frost King,” which she gave to a friend as a birthday gift. When the story found its way into print in a magazine, readers soon realized that it bore great similarities to “The Frost Fairies,” a children’s short story by Margaret Canby. Admiration for Keller now turned into accusation, and Helen was accused of plagiarism and deliberate falsehood, even though she said that she had no recollection of reading Canby’s story, and thought she had made it up herself. The young Helen was subjected to a ruthless inquisition, which left its mark on her for the rest of her life.

There is a subtle defense of plagiarism in the connection he draws with false memories, and the value of ignoring the source.

Indifference to source allows us to assimilate what we read, what we are told, what others say and think and write and paint, as intensely and richly as if they were primary experiences. It allows us to see and hear with other eyes and ears, to enter into other minds, to assimilate the art and science and religion of the whole culture, to enter into and contribute to the common mind, the general commonwealth of knowledge. This sort of sharing and participation, this communion, would not be possible if all our knowledge, our memories, were tagged and identified, seen as private, exclusively ours. Memory is dialogic and arises not only from direct experience but from the intercourse of many minds.

Kepi kiss: David Olson.

In our paper, Alex Frankel, Emir Kamenica and I argue that soccer is among the most suspenseful sports according to our theoretical measure. Now, via Matt Dickenson, comes an empirical validation of this finding using German cardiac arrest data:

The red line shows the spike in heart attacks on the dates of 2006 World Cup matches involving the German national team. Note that point 7 is the third place match against Portugal after Germany had been eliminated in their semi-final match against Italy (point 6.)

Many laws that restrict freedoms are effectively substitutes for private contracts. In a frictionless world we wouldn’t need those laws because every subset of individuals could sign private contracts to decide efficiently what the laws decide bluntly and uniformly. But given transaction costs and bargaining inefficiencies those blunt laws are the best we can do.

Some people might want to sign contracts that constrain themselves. For example I might know that I am tempted to drink too many Big Gulps and I might want to contract with every potential supplier of large sugary drinks, getting them to agree never to sell them to me even if I ask for it. But this kind of contract is plagued not only by the transaction costs and bargaining inefficiencies that justify many existing planks in the social contract, but in addition a new friction: these contracts are simply not enforceable.

Because even with such a contract in place, when I actually am tempted to buy a Slurpee, it will be in the interest of both me and my Slurpee supplier to nullify the contract. (It doesn’t solve the problem to structure the contract so that I have to pay 7-11 if I buy a Slurpee from them. If that contract works then I don’t buy the Slurpee and 7-11 would be willing to agree to sign a second contract that nullifies the first one in order to sell me a Slurpee.)

These considerations alone don’t imply that it would be socially efficient to substitute a blanket ban on large sugary drinks for the unenforceable contracts. But what they do imply is that it would be efficient for the courts to recognize such a ban if a large enough segment of the population wants it. (And this is in no way intended to suggest that one Michael Bloomberg by himself constitutes a large enough segment of the population.)

Since you’ve only ever been you and you’ve had to listen to yourself talk about your own thoughts for all this time, by now you are bored of yourself. Your thoughts and acts seem so trite compared to everyone else’s so instead you try to be like them. But you fail to account for the fact that to everybody else you are pretty much brand new and indeed you would be a refreshing break from their boring selves if only you would just be you.

This is an interesting article, albeit breathless and probably completely deluded, about acquired savantism: people suffering traumatic brain injury and as a result developing a talent that they did not have before. Here’s at least one bit that sounds legit:

Last spring, Snyder published what many consider to be his most substantive work. He and his colleagues gave 28 volunteers a geometric puzzle that has stumped laboratory subjects for more than 50 years. The challenge: Connect nine dots, arrayed in three rows of three, using four straight lines without retracing a line or lifting the pen. None of the subjects could solve the problem. Then Snyder and his colleagues used a technique called transcranial direct current stimulation (tDCS) to temporarily immobilize the same area of the brain destroyed by dementia in Miller’s acquired savants. The noninvasive technique, which is commonly used to evaluate brain damage in stroke patients, delivers a weak electrical current to the scalp through electrodes, depolarizing or hyperpolarizing neural circuits until they have slowed to a crawl. After tDCS, more than 40 percent of the participants in Snyder’s experiment solved the problem. (None of those in a control group given placebo tDCS identified the solution.)

(I know this problem because it was presented to us as a brain teaser when I was in 2nd grade. Nobody got it. The solution while quite simple is “difficult” to see because you instinctively self-impose the unstated rule that your pencil cannot leave the square.)

The suggestion is that with some drugs or surgery we could all unlock some hidden sensitivity or creativity that is latent within us. Forget about whether any of the anecdotes in the article are true examples of the phenomenon (the piano guy almost certainly is not. Watch the video, he’s doing what anyone with some concentrated practice can do. There is no evidence that he has acquired a natural, untrained facility at the piano. And anyway even if we accept the hypothesis about brain damage and perception/concentration why should we believe that a blow to the head can give you a physical ability that normally takes months or years of exercise to acquire?)

The examples aside, there is reason to believe that something like this could be possible. At least the natural counterargument is wrong: our brains should already be using whatever talents they have to their fullest. It would be an evolutionary waste to build the structure to do something useful and not actually use it. This argument is wrong but not because playing the piano and sculpting bronze bulls are not valuable survival skills. Neither is Sudoku but we have that skill because it’s one way to apply a deeper, portable skill that can also be usefully applied. No, the argument is wrong because it ignores the second-best nature of the evolutionary optimum.

It could be that we have a system that takes in tons of sensory information all of which is potentially available to us at a conscious level but in practice is finely filtered for just the most relevant details. While the optimal level of detail might vary with the circumstances the fineness of the filter could have been selected for the average case. That’s the second best optimum if it is too complex a problem to vary the level of detail according to circumstances. If so, then artificial intervention could improve on the second-best by suppressing the filter at chosen times.

A fascinating article I found after digging through Conor Friedersdorf’s best of journalism.

What distinguishes a great mnemonist, I learned, is the ability to create lavish images on the fly, to paint in the mind a scene so unlike any other it cannot be forgotten. And to do it quickly. Many competitive mnemonists argue that their skills are less a feat of memory than of creativity. For example, one of the most popular techniques used to memorize playing cards involves associating every card with an image of a celebrity performing some sort of a ludicrous — and therefore memorable — action on a mundane object. When it comes time to remember the order of a series of cards, those memorized images are shuffled and recombined to form new and unforgettable scenes in the mind’s eye. Using this technique, Ed Cooke showed me how an entire deck can be quickly transformed into a comically surreal, and unforgettable, memory palace.

The author documents his training as a mental athlete and his US record breaking performance memorizing a deck of cards in 1 minute 40 seconds. I personally have a terrible memory, especially for names, but I don’t think this kind of active memorization is especially productive. The kind of memory enhancement we could all benefit from is the ability to call up more and more ideas/thoughts/experiences related to whatever is currently going on. We need more fluid relational memory, RAM not so much.

People don’t like to be idle. They are willing to spend energy on pointless activities just to avoid idleness. But they are especially willing to do that if they can make up fake reasons to justify the unproductive busyness. That’s the conclusion from a clever experiment Emir Kamenica told me about.

In this experiment subjects who had just completed a survey were told they had to deliver the survey to one of two locations before being presented with the next survey 15 minutes later. They could walk and deliver to a faraway location, about a 15 minute roundtrip, or deliver it nearby, in which case they would have to stand around for the remainder of the 15 minutes.

There was candy waiting for them at the delivery point. In a benchmark treatment it was the same candy at each location. In that treatment the majority of subjects opted for the short walk and idleness.

In a second treatment two different, but equally attractive (experimentally verified) types of candy were available at the two locations and the subjects were told this. In this treatment the majority of subjects walked the long distance.

The researchers conclude that the subjects wanted to avoid idleness and rationalized the effort spent by convincing themselves that they were getting the better reward. Indeed the subjects who traveled far reported greater happiness than their idle counterparts regardless of what candy was available.

Comes from being able to infer that since by now you have not found any clear reason to favor one choice over the other it means that you are close to indifferent and you should pick now, even randomly.

Ever seen this? There’s a panel of experts and every couple weeks they are presented with a policy statement like “The debt ceiling creates too much uncertainty” and each one has to say individually whether they agree or disagree.

Randomize whether you put the statement in the affirmative or the negative: “The debt ceiling creates too much uncertainty” versus “The debt ceiling doesn’t create too much uncertainty”, and see how that affects which experts agree or disagree.

It’s the opposite of deduction. We didn’t sit down and try to figure something out.

It’s as if the computation happened so deep within our head, like all this time the basic facts were already there and in our subconscious minds they are stirring around until by chance one set of facts comes together that happens to imply something new and the reaction creates an explosion big enough to get the attention of our conscious mind.

And at a conscious level we say “aha! I instinctively know something is true. But why?” And here’s where the 99% perspiration comes. We have to go back to find out why it’s true.

Ok sometimes we discover we were wrong. Other times we discover conclusively why we were right.

But almost always we can’t decide conclusively one way or another but we find reasons for what we believe.

And we believe what we believe. Rationally. We felt it instinctively. And that belief, that sensation is a thing. It’s a signal. That we had that inspiration serves as information to us. We have faith in our beliefs.

To lack such faith would be to invite the padded cell.

So that’s another piece of evidence to go along with the facts. And it just might, again perfectly rationally, tip the balance.

- When you turn a bottle over to pour out its contents it is less messy if you do the tilt thing to make sure there is a space for air to flow back into the bottle. But which way of pouring is faster if you just want to dump it out in the shortest time possible? I think the tilt can never be as fast.

- I aspire to hit for the cycle: publish in all the top 5 economics journals. But it would be a lifetime cycle. Has anyone ever hit for the cycle in a single year?

- If you know you’ll get over it eventually shouldn’t you be over it now? And if not should you really get over it later?

- The efficient markets hypothesis means that there is no trading strategy that consistently loses money. (Because if there were then the negative of that strategy would consistently make a profit.) So trade with abandon!

- I predict that in the future the distinct meanings of the prepositions “in” and “on” will progressively blur because of mobile phone typos.

Drawing: Scatterbrained from www.f1me.net (yaaay she’s back.)

One reason people over-react to information is that they fail to recognize that the new information is redundant. If two friends tell you they’ve heard great things about a new restaurant in town it matters whether those are two independent sources of information or really just one. It may be that they both heard it from the same source, a recent restaurant review in the newspaper. When you neglect to account for redundancies in your information you become more confident in your beliefs than is justified.

This kind of problem gets worse and worse when the social network becomes more connected because it’s ever more likely that your two friends have mutual friends.

And it can explain an anomaly of psychology: polarization. Sandeep in his paper with Peter Klibanoff and Eran Hanany gives a good example of polarization.

A number of voters are in a television studio before a U.S. Presidential debate. They are asked the likelihood that the Democratic candidate will cut the budget deficit, as he claims. Some think it is likely and others unlikely. The voters are asked the same question again after the debate. They become even more convinced that their initial inclination is correct.

It’s inconsistent with Bayesian information processing for groups who observe the same information to systematically move their beliefs in opposite directions. But polarization is not just the observation that the beliefs move in opposite directions. It’s that the information accentuates the original disagreement rather than reducing it. The groups move in the same opposite directions that caused their disagreement originally.

Here’s a simple explanation for it that as far as I know is a new one: the voters fail to recognize that the debate is not generating any new information relative to what they already knew.

Prior to the debate the voters had seen the candidate speaking and heard his view on the issue. Even if these voters had no bias ex ante, their differential reaction to this pre-debate information separates the voters into two groups according to whether they believe the candidate will cut the deficit or not.

Now when they see the debate they are seeing the same redundant information again. If they recognized that the information was redundant they would not move at all. But if they don’t then they are all going to react to the debate in the same way they reacted to the original pre-debate information. Each will become more confident in his beliefs. As a result they will polarize even further.

Note that an implication of this theory is that whenever a common piece of information causes two people to revise their beliefs in opposite directions it must be to increase polarization, not reduce it.
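The redundancy story can be sketched in a few lines, again in log-odds. Everything here is a hypothetical illustration rather than the model from any paper: both voters start with no ex ante bias (log-odds 0), their differential reading of the pre-debate information is assumed to be +1 for one and -1 for the other, and the debate simply repeats that same information.

```python
import math


def to_prob(z: float) -> float:
    """Convert log-odds into a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def beliefs(reading: float, recognizes_redundancy: bool) -> tuple[float, float]:
    """Return (belief before the debate, belief after the debate).

    `reading` is how this voter interpreted the pre-debate information,
    as a log-likelihood ratio. The debate repeats the same information.
    """
    prior = 0.0                      # no ex ante bias
    before = prior + reading         # after the pre-debate information
    if recognizes_redundancy:
        after = before               # nothing new, so no update
    else:
        after = before + reading     # same information counted twice
    return to_prob(before), to_prob(after)


for name, reading in [("optimist", 1.0), ("pessimist", -1.0)]:
    for aware in (True, False):
        before, after = beliefs(reading, aware)
        label = "recognizes redundancy" if aware else "neglects redundancy"
        print(f"{name:9} {label:22} before={before:.3f} after={after:.3f}")
```

The voters who recognize the redundancy do not move. The ones who neglect it each move further in the direction they already leaned, so the gap between them widens after watching the same debate.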

Free as in liberated. Here’s the opening paragraph:

I wrote this paper with the recognition that it is unlikely to be accepted for publication. There is something liberating about writing a paper without trying to please referees and without having to take into consideration the various protocols and conventions imposed on researchers in experimental economics (see Rubinstein (2001)). It gives one a feeling of real academic freedom!

The paper reports on long-running experiments relating response times to mistakes in decision-making.

With social networking you are now exposed to so many different voices in rapid succession. Each one is monotonous as an individual but individual voices arrive too infrequently for you to notice that; all you see is the endless variety of people saying and thinking things that you can never think or say. It seems like everyone in the world is more creative than one-dimensional you.

SOME years ago, executives at a Houston airport faced a troubling customer-relations issue. Passengers were lodging an inordinate number of complaints about the long waits at baggage claim. In response, the executives increased the number of baggage handlers working that shift. The plan worked: the average wait fell to eight minutes, well within industry benchmarks. But the complaints persisted.

Puzzled, the airport executives undertook a more careful, on-site analysis. They found that it took passengers a minute to walk from their arrival gates to baggage claim and seven more minutes to get their bags. Roughly 88 percent of their time, in other words, was spent standing around waiting for their bags.

So the airport decided on a new approach: instead of reducing wait times, it moved the arrival gates away from the main terminal and routed bags to the outermost carousel. Passengers now had to walk six times longer to get their bags. Complaints dropped to near zero.

The final seconds are ticking off the clock and the opposing team is lining up to kick a game-winning field goal. There is no time for another play so the game is on the kicker’s foot. You have a timeout to use.

Calling the timeout causes the kicker to stand around for another minute pondering his fateful task. They call it “icing” the kicker because the common perception is that the extra time in the spotlight and the extra time to think about it will increase the chance that he chokes. On the other hand you might think that the extra time only works in the kicker’s favor. After all, up to this point he wasn’t sure if or when he was going to take the field and what distance he would be trying for. The timeout gives him a chance to line up the kick and mentally prepare.

What do the data say? According to this article in the Wall Street Journal, icing the kicker has almost no effect and if anything only backfires. Among all field goal attempts taken since the 2000 season when there were less than 2 minutes remaining, kickers made 77.3% of them when there was no timeout called and 79.7% when the kicker was “iced.”

So much for icing? No! Icing the kicker is a successful strategy because it keeps the kicker guessing as to when he will actually have to prepare himself to perform. The optimal use of the strategy is to randomize the decision whether to call a timeout in order to maximize uncertainty. We’ve all seen kickers, golfers, players of any type of finesse sport mentally and physically prepare themselves for a one-off performance. The mental focus required is a scarce resource. Randomizing the decision to ice the kicker forces the kicker to choose how to ration this resource between two potential moments when he will have to step up.

If you ice with probability zero he knows to focus all his attention when he first takes the field. If you ice with probability 1 he knows to save it all for the timeout. The optimal icing probability leaves him indifferent about how to allocate the marginal capacity of attention between the two moments and minimizes his overall probability of a successful field goal. (The overall probability is the probability of icing times the success probability conditional on icing plus the probability of not icing times the success probability conditional on not icing.)

Indeed the simplest model would imply that the optimal icing strategy equalizes the kicker’s success probability conditional on icing and conditional on no icing. So the statistics quoted in the WSJ article are perfectly consistent with icing as part of an optimal strategy, properly understood.
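To make the simplest model concrete, here is a rough sketch under assumptions of my own. The kicker has one unit of focus to split between the first moment (no timeout) and the second (after a timeout), and his success probability at a moment is a concave function of the focus he saved for it. The function `success`, its coefficients, and the grid search are hypothetical illustrations, not estimates from the WSJ data.

```python
import math

def success(focus):
    """Assumed success probability given the share of focus (0 to 1) saved for the kick."""
    return 0.60 + 0.25 * math.sqrt(focus)

def overall(p_ice, a):
    """Overall success probability.

    a is the share of focus spent on the first moment (no timeout);
    the remaining 1 - a is saved for the moment after a timeout.
    """
    return (1 - p_ice) * success(a) + p_ice * success(1 - a)

GRID = [i / 100 for i in range(101)]

def kicker_best_response(p_ice):
    """Focus split that maximizes the kicker's overall success probability."""
    return max(GRID, key=lambda a: overall(p_ice, a))

def optimal_icing():
    """Icing probability that minimizes success against the kicker's best response."""
    return min(GRID, key=lambda p: overall(p, kicker_best_response(p)))

p_star = optimal_icing()
a_star = kicker_best_response(p_star)
print("icing probability:", p_star)
print("success if not iced:", round(success(a_star), 3))
print("success if iced:", round(success(1 - a_star), 3))
```

With this symmetric toy function the search settles on icing half the time, and the kicker's success rate is the same whether or not the timeout is called. Always icing or never icing lets him put all his focus on one moment and do strictly better.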

But whatever you do, call the timeout before he gets a freebie practice kick.

From the blog (?) notes.unwieldy.net:

The average New York City taxi cab driver makes $90,747 in revenue per year. There are roughly 13,267 cabs in the city. In 2007, NYC forced cab drivers to begin taking credit cards, which involved installing a touch screen system for payment.

During payment, the user is presented with three default buttons for tipping: 20%, 25%, and 30%. When cabs were cash only, the average tip was roughly 10%. After the introduction of this system, the tip percentage jumped to 22%.

He calculates that the tip nudge increased cab revenues by $144,146,165 per year.
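The quoted post does not show its arithmetic, so here is a back-of-the-envelope reconstruction under my own assumptions: one driver per cab, every fare paid through the new system, and the quoted revenue figure used as the base for the tip percentage. The function name `tip_revenue_gain` is hypothetical.

```python
REVENUE_PER_DRIVER = 90_747   # dollars per year, from the quoted post
NUM_CABS = 13_267             # from the quoted post
TIP_BEFORE_PCT = 10           # average tip when cabs were cash only
TIP_AFTER_PCT = 22            # average tip after the touch screens

def tip_revenue_gain(revenue_per_driver, num_cabs, before_pct, after_pct):
    """Extra yearly tip revenue if the tip rate rises on the whole revenue base."""
    total_revenue = revenue_per_driver * num_cabs
    return total_revenue * (after_pct - before_pct) / 100

gain = tip_revenue_gain(REVENUE_PER_DRIVER, NUM_CABS, TIP_BEFORE_PCT, TIP_AFTER_PCT)
print(f"${gain:,.0f} per year")
```

This lands at roughly $144 million a year, the same ballpark as the quoted figure. The small difference presumably comes from inputs or rounding that the post does not spell out.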

Kunz was a sociologist at Brigham Young University. Earlier that year he’d decided to do an experiment to see what would happen if he sent Christmas cards to total strangers.

And so he went out and collected directories for some nearby towns and picked out around 600 names. “I started out at a random number and then skipped so many and got to the next one,” he says.

To these 600 strangers, Kunz sent his Christmas greetings: handwritten notes or a card with a photo of him and his family. And then Kunz waited to see what would happen.

But about five days later, responses started filtering back — slowly at first and then more, until eventually they were coming 12, 15 at a time. Eventually Kunz got more than 200 replies. “I was really surprised by how many responses there were,” he says. “And I was surprised by the number of letters that were written, some of them three, four pages long.”

The premise of this article is that people feel compelled to reciprocate your generosity. And once you know that, you can take advantage of it.

Exhibit A: those little pre-printed address labels that come to us in the mail this time of year along with letters asking for donations.

Those labels seem innocent enough, but they often trigger a small but very real dilemma. “I can’t send it back to them because it’s got my name on it,” Cialdini says. “But as soon as I’ve decided to keep that packet of labels, I’m in the jaws of the rule.”

The packet of labels costs roughly 9 cents, Cialdini says, but it dramatically increases the number of people who give to the charities that send them. “The hit rate goes from 18 to 35 percent,” he says. In other words, the number of people who donate almost doubles.

- To indirectly find out what a person of the opposite sex thinks of her/himself, ask what s/he thinks are the big differences between men and women.

- Letters of recommendation usually exaggerate the quality of the candidate but writers can only bring themselves to go so far. To get extra mileage try phrases like “he’s great, if not outstanding” and hope that it’s understood as “he’s great, maybe even outstanding” when what you really mean is “he’s not outstanding, just great.”

- In chess, kids are taught never to move a piece twice in the opening. This is a clear sunk cost fallacy.

- I remember hearing that numerals are base 10 because we have 10 fingers. But then why is music (probably more primitive than numerals) counted mostly in fours?

- “Loss aversion” is a dumb terminology. At least risk aversion means something: you can be either risk averse or risk loving. Who likes losses?

Remember how baffled Kasparov was about Deep Blue’s play in their famous match? It gets interesting.

Earlier this year, IBM celebrated the 15-year anniversary of its supercomputer Deep Blue beating chess champion Garry Kasparov. According to a new book, however, it may have been an accidental glitch rather than computing firepower that gave Deep Blue the win.

At the Washington Post, Brad Plumer highlights a passage from Nate Silver’s The Signal and the Noise. Silver interviewed Murray Campbell, a computer scientist that worked on Deep Blue, who explained that during the 1997 tournament the supercomputer suffered from a bug in the first game. Unable to pick a strategic move because of the glitch, it resorted to its fall-back mechanism: choosing a play at random. “A bug occurred in the game and it may have made Kasparov misunderstand the capabilities of Deep Blue,” Campbell tells Silver in the book. “He didn’t come up with the theory that the move it played was a bug.”

As Silver explains it, Kasparov may have taken his own inability to understand the logic of Deep Blue’s buggy move as a sign of the computer’s superiority. Sure enough, Kasparov began having difficulty in the second game of the tournament — and Deep Blue ended up winning in the end.

Visor volley: Mallesh Pai.

Suppose that what makes a person happy is when their fortunes exceed expectations by a discrete amount (and that falling short of expectations is what makes them unhappy). Then simply because of convergence of expectations:

- People will have few really happy phases in their lives.

- Indeed even if you lived forever you would have only finitely many spells of happiness.

- Most of the happy moments will come when you are young.

- Happiness will be short-lived.

- The biggest cross-sectional variance in happiness will be among the young.

- When expectations adjust to the rate at which your fortunes improve, chasing further happiness requires improving your fortunes at an accelerating rate.

- If life expectancy is increasing and we simply extrapolate expectations into later stages of life we are likely to be increasingly depressed when we are old.

- There could easily be an inverse relationship between intelligence and happiness.