
In the bottom of the 8th inning of tonight’s game 3 of the NLDS, Anthony Rizzo blooped a single to shallow left and Leonys Martin scored what proved to be the winning run. The noteworthy thing is that Martin scored from second base on a ball that was so shallow it was playable by the shortstop.

The reason he was able to score from second is that there were two outs and he was free to run without worrying about having to tag up if the ball was caught. So this raises an interesting question: was this a situation in which the Cubs were better off with two outs (as opposed to one or zero)?

Hint: neither answer is clear-cut, but I do claim there is a right answer.

You are in a negotiation and you are offered X. You really want both X and Y but you have just been given the option to take X now and then continue negotiating for Y.

In this story you are the House of Representatives, X is repeal of the mandate and Y is rollback of Medicaid. You have been offered X in the form of the “skinny repeal.”

Will you take X? That is, will the House just pass the skinny bill as Lindsey Graham and John McCain worry they might?

Well, it’s quite likely they will not. But this shouldn’t make the Senators any less worried. Because the House always has the *option* of passing the skinny bill, whatever they do finally agree to in the conference committee will have to be at least as good for the House majority as what they could take immediately.

In other words, X+Y, which by the way, if you do the math, equals the BCRA, which Senate Republicans already rejected.

A one player extensive-form game with perfect information. The player’s name is Zeno. The game has an infinite horizon. For every non-negative integer N there is a node at which Zeno chooses either to Continue or Quit. If he Quits at node N his payoff is -N/(N+1). If he continues he moves on to node N+1.

There is also a terminal node at infinity. This node is reached if and only if Zeno Continues at all finite nodes. The payoff at infinity is 1.

Here is the story: Zeno stands at the starting line of a Marathon and decides whether to quit. If he runs, then after half the distance he decides again whether to quit, after half of the remaining distance he decides again, etc. His goal is to finish the Marathon and run into the arms of his proud and adoring fans. If he doesn’t get to the finish line (the node at infinity) then all he has done is make himself tired with no compensating adoration. The farther he runs before quitting the more tired he is.

The game has a unique subgame perfect equilibrium: Zeno completes the Marathon. But it has another strategy which is unimprovable by a one-stage deviation: Zeno quits at every opportunity.

This latter strategy has a nice behavioral interpretation. Zeno lacks confidence in his determination to complete the Marathon. In particular he wants to complete it but he expects that if he runs another half of the distance he will wind up quitting once he gets there so why bother. And indeed the reason he knows he will quit after running half the distance is that he knows that when he gets there he will know that after running another half the distance he will still quit so why bother.

This is a great example for teaching the One Stage Deviation Principle, which asserts that strategies that are unimprovable are also SPE. The OSDP requires the game to be continuous at infinity. The Marathon game is not continuous at infinity.

To make it continuous at infinity, assume that Zeno’s fans will be almost as proud of him if he runs 26.1999 miles as they would be if he also ran the remaining one ten-thousandth of a mile. If so, then Zeno’s payoff from nearly completing the Marathon is positive and the Quitting strategy becomes improvable.
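The claims about the two strategies can be checked mechanically. This is a minimal sketch using the payoffs as stated (quitting at node N pays -N/(N+1), finishing pays 1), plus a hypothetical continuous variant of my own in which quitting at node N pays N/(N+1), so that nearly finishing is worth almost as much as finishing. Since a program can only look at finitely many nodes, it checks one-stage deviations at the first few nodes rather than proving anything about the infinite game.

```python
from fractions import Fraction

FINISH_PAYOFF = Fraction(1)  # payoff at the terminal node at infinity


def quit_payoff(n: int) -> Fraction:
    """Original Marathon game: quitting at node n pays -n/(n+1)."""
    return Fraction(-n, n + 1)


def quit_payoff_continuous(n: int) -> Fraction:
    """Hypothetical continuous variant: quitting near the end pays almost 1."""
    return Fraction(n, n + 1)


def always_quit_is_unimprovable(payoff, nodes: int) -> bool:
    """Against Always-Quit, a one-stage deviation at node n means Continuing
    once and then Quitting at node n+1. Check that it never helps."""
    return all(payoff(n + 1) <= payoff(n) for n in range(nodes))


def always_quit_beaten_by_finishing(payoff, nodes: int) -> bool:
    """An infinite deviation (Continue forever) beats Quitting at every node,
    so Always-Quit is not subgame perfect."""
    return all(FINISH_PAYOFF > payoff(n) for n in range(nodes))


print(always_quit_is_unimprovable(quit_payoff, 1000))             # True
print(always_quit_beaten_by_finishing(quit_payoff, 1000))         # True
print(always_quit_is_unimprovable(quit_payoff_continuous, 1000))  # False
```

In the original game the only profitable deviation from Always-Quit is the infinite one, which is exactly what the One Stage Deviation Principle cannot see when continuity at infinity fails.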

On Saturday The New York Times published the story that Donald Trump Jr., together with Paul Manafort and Jared Kushner, met in Trump Tower with a Russian lawyer. That was basically the entire content of the story in terms of new information being reported. The rest of the story was a recap of allegations about collusion together with some hint-hint dot connecting.

Junior responded by acknowledging the meeting and saying that it was about Russian adoptions.

The Times followed up on Sunday by reporting that this was in fact a lie, that Junior was promised information about Hillary Clinton that would be valuable for the campaign.

Junior acknowledged this, but minimized the significance of the meeting through a number of statements.

Finally the Times was set to publish accounts of an email thread that apparently showed that Trump Jr. was told in advance that this was an effort by the Russian government to provide information that would help his father get elected.

Before they could do that Junior published the actual emails himself.

Someone, either the Times’ source or the Times journalists themselves, had all of that information at the very beginning. And rather than do the normal journalistic thing of reporting what they knew, they released it in little bits.

The first bit was barely meaningful to every reader in the world except for Trump Jr., Manafort and Kushner. It was a message: we have some information that you might not like. And it was a challenge to find the right response.

Their dilemma was that first and foremost they don’t want to admit to having colluded with the Russian government. But if that was going to be proven anyway, they would rather not first lie about it and then have to admit it.

They tried lying. The Times released another little piece which did two things. First, it proved they lied, and second, it proved that the Times had more information than it had revealed in its original story.

Now Junior and Co. had to guess just how much information the Times had. They guessed wrong. Indeed the Times had all of the information, and in the end Junior was forced to admit what he could have admitted at the beginning, and along the way he was caught in two lies. He even ended up pre-empting the Times’ revelation by revealing everything himself.

This is a very clever tactic by journalists who are technically doing the journalistically-ethical thing of publishing only the truth, but being strategic about it by publishing in little pieces to maximize the damage, probably because the Trump administration has made them the enemy.

Note how truly powerful this tactic is once it has been deployed. The next time the Times has some but not all of the information that would prove guilt, they will again release a little bit of it. Trump will have to guess again how much they are holding back. Trump will have to trade off the probability of being caught in another lie (if The Times truly has all the dirt) versus credible denial (if they don’t). If used optimally, this bluffing tactic can get the subject to pre-emptively confess (i.e. to fold, to extend the poker analogy) even when the damaging evidence isn’t there.

(One could write a simple dynamic Bayesian persuasion model to calculate the Times’ optimal probability of bluffing in order to maximize the probability that the subject’s best-response is to pre-emptively confess.)
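Here is a minimal one-shot sketch in the spirit of that suggestion; it is a static Bayesian persuasion calculation, not the dynamic model, and every ingredient is a hypothetical assumption of mine: a prior `mu` that the Times holds the full story, a belief `threshold` above which the subject prefers confessing to denying, a Times that always releases a teaser when it has the goods and bluffs (releases one anyway) with probability `bluff` when it doesn't, and a subject who confesses when exactly indifferent. The Times then bluffs as often as it can while keeping the subject's posterior at the threshold.

```python
def posterior_has_goods(mu: float, bluff: float) -> float:
    """Subject's belief that the Times holds the full story after seeing a teaser.
    Assumes a teaser is always released when the Times has the goods, and
    released with probability `bluff` when it does not."""
    return mu / (mu + (1 - mu) * bluff)


def optimal_bluff(mu: float, threshold: float) -> float:
    """Largest bluffing probability that keeps the posterior at or above the
    subject's confession threshold."""
    if mu >= threshold:
        return 1.0  # the prior alone already induces a confession
    return mu * (1 - threshold) / ((1 - mu) * threshold)


def confession_probability(mu: float, threshold: float) -> float:
    """Ex ante probability that the subject pre-emptively confesses,
    i.e. the probability that a teaser is released at all."""
    return mu + (1 - mu) * optimal_bluff(mu, threshold)


mu, t = 0.3, 0.6  # hypothetical prior and confession threshold
b = optimal_bluff(mu, t)
print(round(b, 4))                              # 0.2857
print(round(posterior_has_goods(mu, b), 4))     # 0.6
print(round(confession_probability(mu, t), 4))  # 0.5
```

With these made-up numbers the subject confesses half the time even though the Times has the goods only 30% of the time.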

Everyone who has armchair-theorized why movie theaters don’t sell assigned seats in advance is now obligated to explain why this has changed and how that’s consistent with their model.

I will start. My theory was based on the value of advertising to movie-goers who must arrive early to get preferred seats and then are a captive audience. This has become significantly less valuable now that said movie-goers can bring their own screens and be captive to some other advertiser.

Indeed today at the movies we arrived on time to our assigned seat, there were no ads and just a few previews. (Spoiler alert: the movie with Stringer Bell and the Titanic girl is going to suck, Blade Runner is going to be great and Wonder Woman was awesome for the first and last 10 minutes but apart from that was basically Splash meets Hot Lead and Cold Feet.)

- One reason time seems to go faster when you are older. “Has it already been a year?” You have so many more things going on and only a few of them can reside in working memory. When some milestone brings a buried one back to the surface it feels like time has passed more quickly than if it were on your mind the whole time.

- A 50-meter-tall swimmer would win every race. Generally taller swimmers have less ground to cover and so the distance is shorter for them. Is there any kind of race other than swimming like that?

- If you have your brain cut into two parts so that one side is unaware of what the other is doing, can you tickle yourself?

The anticlimax that is/will be the Comey testimony proves something I have always thought about skeletons in the closet. They should be released early when not everyone is paying attention.

The premise is that outrage is something that needs to be coordinated. It’s not enough for everyone to feel outraged. It’s not even enough for everyone to know everyone is feeling outraged. The feeling of outrage has to be common knowledge. Only then do enough people know that when they act on their outrage there will be enough others acting on theirs for it to become a movement that can have impact. (Outrage is a scarce resource.)

So releasing skeletons sometime in the past, when nobody was actually looking for skeletons, is a way to dampen that coordination. Because then when the time comes and people are actually focused on you and any skeletons you might have, the old, already-uncloseted skeletons can’t have the same impact as a brand new one released right now when everyone sees it for the first time. They are “old news” (and it’s no wonder that’s a go-to line of defense against resurrected skeletons). Strategically, everyone infers that since the skeletons didn’t cause outrage at the time they must not be that outrageous to that many people, and so they are not a call to outrage now.

So despite the several outrageous things in the Comey statement, they are all old news and that feeling of anti-climax is a symptom of the above logic. If Comey had not previewed his testimony in the preceding weeks but instead dropped it as one brand new bombshell on TV in front of a Super Bowl audience (paging Michael Chwe) the same skeletons would cause significantly more outrage.

An interesting corollary is that leaks are actually Trump’s ally. Leaking the scandal little by little through varied and segregated media channels is a way of getting the skeletons out with minimal impact well in advance of any chance of outrage.

(There’s a new paper by Gratton, Holden and Kolotilin that also looks at bombshells but I think the argument is a little different.)

- Watch for the key leading indicator of impending Presidential downfall: the Vice President going silent. We will reach a point where the VP’s objective switches from defending the President to preserving his own viability as President. Right around that turning point he will try his best to avoid having to comment.

- That means that before reaching that point, Democrats will do their very best to get him to comment while they still have a chance. Knowing this of course makes the VP want to hide even sooner, etc. The unraveling may have already reached back to the present moment.

- At some point the VP himself becomes a threat to the President because Republicans in Congress will try to find the smoothest path to a fresh Presidency with the possibility of delivering on their agenda intact. This will involve coordination with the VP.

- That means the President himself will try to get the VP to publicly support him in order to ensure that the VP is tarred with the same brush, removing the above branch of the tree and maintaining the threat of a messy impeachment proceeding that congressional Republicans will want to avoid. Of course the first sign that the President needs the VP is the first signal to the VP that his best response is to run far away, accelerating the unraveling further.

- This implies another leading indicator of impending doom. There will come a time when it’s the GOP that wants to hasten the proceedings and the Democrats will try to slow them down.

- Trump of course is the major wild-card (sic) in all of this. He has no loyalty to anybody and he has a massive megaphone whether he is in office or out. There is the prospect of Trump busting out tapes of conversations with GOP leaders expressing support. There is the possibility that he publicly turns on Pence before actually being removed from office.

- And of course the GOP is by no means out of the water once Trump is gone. He will be tweeting. He will have his “tapes”. He could even run again.

This paper by Finkelstein, Hendren, and Shepard finds that the uninsured have such a low willingness to pay for health insurance that they wouldn’t even cover the costs they impose on insurers:

But for our entire in-sample distribution – which spans the 6th to the 70th percentile of the WTP distribution – the WTP of marginal enrollees still lies far below their own expected costs imposed on insurers for either the H or L plans. For example, a median WTP individual imposes a cost of $340 on the insurer for the H plan, but is willing to pay only about $100 for the H plan. This suggests that textbook subsidies offsetting adverse selection would be insufficient for generating take-up for at least 70% of this low-income population. Coverage is low not simply because of adverse selection but because people are not willing to pay their own cost they impose on the insurer.

Large subsidies would be required to achieve universal coverage. And this is not correcting a market failure; it’s paying for something that the uninsured are revealing it would be inefficient to provide.

Except when you look at the likely reason.

Individuals choosing to forego health insurance exercise the ability to utilize uncompensated care; this externality from insurance choice raises a potential Samaritan’s dilemma rationale (Buchanan, 1975) for providing health insurance subsidies by using government taxes to internalize the externality imposed on the providers of uncompensated care when individuals choose to remain uninsured.

They are going to emergency rooms, receiving charity, etc. The low revealed willingness to pay comes from the fact that formal health insurance would crowd out the informal health insurance they are receiving for “free”. But of course the cost is borne by somebody and should be added to the welfare calculation.

Perhaps we should stop thinking of health insurance as solving a traditional market failure but instead think of it in the same terms as flood insurance. Flood insurance is federally mandated for residences in flood plains. The logic is very simple. When there is a flood, those affected will receive assistance whether they have insurance or not. Therefore the only way to get them to internalize any of these costs is to require them to pay in advance, in the form of flood insurance.

When politicians argue against health insurance mandates because they take freedoms away, ask them to argue against mandatory flood insurance on the same terms.

Beanie bob: MR

Yesterday just before the start of a seminar I went to the thermostat to try to warm up the room. The speaker saw me fiddling with it and nodded with approval. I said nothing, turned up the heat and returned to my seat.

The thing is, she couldn’t see whether I was raising the temperature or lowering it. She just assumed that I would be moving the temperature in the same direction as her preference. And of course with good reason. We both want a comfortable room. We each get private signals about whether the room is currently too cold or too warm. Our private signals are correlated. If her private signal suggests the room is too warm then she knows that more likely than not my private signal says the same thing, and since I am acting on my private signal and nothing more I am most likely adjusting the thermostat exactly how she wants it.

I know all of this and therefore I strictly prefer to say nothing about what I am doing. By saying nothing I allow her to continue to believe that I am doing what she wants me to do even though I don’t know for sure what it is she wants. Her signal of course could be different than mine. If instead I tell her I am raising the temperature then in the best case I am just confirming what she already believed and nothing is gained. But there is the risk that I inform her that actually I am doing the opposite of what she wants and then we have a conflict.
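A minimal sketch of the correlated-signals logic, under assumptions that are mine rather than the post's: the room is too warm or too cold with equal probability, and each of us independently gets a signal that reports the true state with probability `accuracy`. The hypothetical helper `prob_signals_agree` computes the chance that my signal agrees with hers given that hers says "too warm"; under these assumptions it works out to `a**2 + (1 - a)**2`, which is above 1/2 whenever the signals are informative.

```python
from itertools import product


def prob_signals_agree(accuracy: float, prior_warm: float = 0.5) -> float:
    """P(my signal says 'too warm' | her signal says 'too warm').

    Hypothetical model: the room is truly too warm with probability
    `prior_warm`, and each signal independently reports the true state
    with probability `accuracy`."""
    her_warm = 0.0   # P(her signal says warm)
    both_warm = 0.0  # P(both signals say warm)
    for state_warm, my_correct, her_correct in product([True, False], repeat=3):
        p_state = prior_warm if state_warm else 1 - prior_warm
        p_me = accuracy if my_correct else 1 - accuracy
        p_her = accuracy if her_correct else 1 - accuracy
        weight = p_state * p_me * p_her
        # A signal says "warm" when it is correct in a warm room
        # or incorrect in a cold room.
        her_says_warm = state_warm == her_correct
        my_says_warm = state_warm == my_correct
        if her_says_warm:
            her_warm += weight
            if my_says_warm:
                both_warm += weight
    return both_warm / her_warm


for a in (0.6, 0.75, 0.9):
    print(a, round(prob_signals_agree(a), 4))  # 0.52, 0.625, 0.82
```

Even with fairly noisy signals, she should expect that more likely than not I am turning the dial her way, which is why saying nothing preserves that belief.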

Her seminar was awesome.

There were 20 seconds left, Vanderbilt had just scored a layup to go ahead by 1, and Northwestern’s Bryant McIntosh was racing to midcourt to set up a final chance to regain the lead and win the game. Vanderbilt’s Matthew Fisher-Davis intentionally fouled him, sending McIntosh to the line and the commentators and all of social media into a state of bewilderment. Yes, we understand intentionally fouling when you are down 1 with 20 seconds to go, but when you are ahead by 1?

But it was a brilliant move, and it failed only because the worst-case scenario (for Vanderbilt) came to pass: McIntosh made two clutch free throws and Vanderbilt did not score on the ensuing possession.

(Before we get into the analysis, a simple way to understand the logic of the play is to notice that intentionally fouling late in the game very often is the right strategic move when you are down by a few points, and there is no reason that should change precipitously when the point differential goes from slightly negative to slightly positive. The tactic is based on a tradeoff between giving away (random) points and getting (for sure) possession. The factors in that tradeoff are continuous as a function of the current scoring margin.)

Let $p$ be the probability that a team scores (at least two points) on a possession. Let $q$ be the probability that Bryant McIntosh makes a free throw. Roughly, the probability that Vanderbilt wins if they do not foul is

$$1 - p$$

because Northwestern is going to play for the final shot and win if they make a field goal.

What is the probability that Vanderbilt wins when Fisher-Davis fouls? There are multiple, mutually exclusive ways they could win. First, McIntosh might miss both free throws. This happens with probability $(1-q)^2$. The other simple case is that McIntosh makes both free throws, a probability $q^2$ event, in which case Vanderbilt wins by scoring on the following possession, which they do with probability $p$. Thus, the total probability Vanderbilt wins in this second case is $q^2 p$.

The third possibility is that McIntosh makes one free throw. This has probability $2q(1-q)$. (I am pretty sure McIntosh was shooting two, i.e. Northwestern was in the double bonus, but if it was a one-and-one this would make Fisher-Davis’ case even stronger.) Now there are two sub-cases. First, Vanderbilt could score on the ensuing possession and win. Second, even if they don’t score, it will be tied and the game will be sent into overtime. Let’s say Vanderbilt wins with probability $1/2$ in overtime, a conservative number since Vanderbilt had all the momentum at that stage of the game.

Then the total probability of a Vanderbilt win in this third case is $2q(1-q)\left[p + \tfrac{1}{2}(1-p)\right]$. Adding up all of these probabilities, Vanderbilt wins using the Fisher-Davis foul with probability

$$(1-q)^2 + q^2 p + 2q(1-q)\left[p + \tfrac{1}{2}(1-p)\right].$$

Fisher-Davis made the right move provided the above expression exceeds $1-p$. Let’s start by noticing some basic properties. First, if $p = 1$ then fouling is always the right move, no matter what $q$ is. (If Northwestern is going to score for sure, you want to foul and get possession so that you can score for sure and win.) If $q = 0$ then again fouling is the right strategy, regardless of $p$. (If he’s going to miss his free throws then send him to the line.)

Next, notice that the probability Vanderbilt wins when Fisher-Davis fouls is monotonically increasing in $p$. Since the probability $1-p$ that Vanderbilt wins without fouling is decreasing in $p$, the larger $p$ is, the better the Fisher-Davis gambit looks.

Finally, even if $q = 1$, so that McIntosh is surely going to sink two free throws, Fisher-Davis made the right move as long as $p > 1/2$.
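Collecting terms in the fouling win probability gives a compact form from which all three properties can be read off directly. This is my own algebra, keeping the assumed $1/2$ chance of winning in overtime:

$$
\begin{aligned}
(1-q)^2 + q^2 p + 2q(1-q)\left[p + \tfrac{1}{2}(1-p)\right]
&= (1-q)^2 + q(1-q) + p\left[q^2 + q(1-q)\right] \\
&= 1 - q + pq.
\end{aligned}
$$

So fouling beats not fouling exactly when $1 - q + pq > 1 - p$, that is, when

$$p > \frac{q}{1+q}.$$

At $q = 1$ the cutoff is $1/2$, and at $q = 0$ it is $0$.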

Ok, so what are the actual values of $p$ and $q$? McIntosh is an 85% free-throw shooter, so $q = .85$. It’s harder to estimate $p$, but here are some guidelines. First, both teams were scoring (at least two points) on just about every possession down the stretch of that game. An estimate based on the last 3 minutes of data would put $p$ high, and in any case certainly larger than $1/2$.

More generally, I googled a bit and found something basketball stat guys call offensive efficiency. It’s an estimate of the number of points scored per possession. Northwestern and Vanderbilt have very similar numbers here, about 1.03. A crude way to translate that into the number we are interested in, namely the probability of at least 2 points in a possession, is to simply divide that number in half, which again gives a value above $1/2$: $1.03/2 \approx .515$. (This would be exactly right if you could only ever score 2 points. But of course there are three-point possessions and one-point possessions.) A third way is to notice that Northwestern was shooting 49% from the field for the game. This doesn’t equal field goals per possession of course, because some possessions lead to turnovers and hence no field goal attempt, and on the other side some possessions lead to multiple field goal attempts due to offensive rebounds.

So as far as I know there isn’t one convincing measure of $p$, but it’s pretty reasonable to put it above $1/2$ at that phase of the game. This would be enough to justify Fisher-Davis even if McIntosh was certain to make both free throws. (I used Wolfram Alpha to figure out what $p$ would be required given the precise value $q = .85$, and it is about .45.)
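The numbers above can be checked with a few lines of Python. This sketch encodes the three cases from the text, with the $1/2$ overtime probability as a default parameter; the break-even formula `q / (1 + q)` relies on that $1/2$ assumption. At $q = .85$ it comes out at roughly .46, consistent with the figure quoted above up to rounding.

```python
def win_prob_no_foul(p: float) -> float:
    """Vanderbilt wins unless Northwestern scores on its final possession."""
    return 1 - p


def win_prob_foul(p: float, q: float, overtime: float = 0.5) -> float:
    """Sum of the three mutually exclusive cases described in the text."""
    miss_both = (1 - q) ** 2                     # Vanderbilt keeps its 1-point lead
    make_both = q ** 2 * p                       # Vanderbilt must score to win
    make_one = 2 * q * (1 - q) * (p + (1 - p) * overtime)  # score, or win in OT
    return miss_both + make_both + make_one


def break_even_p(q: float) -> float:
    """Scoring probability at which fouling and not fouling tie (overtime = 1/2)."""
    return q / (1 + q)


q = 0.85  # McIntosh's free-throw percentage
print(round(break_even_p(q), 4))  # 0.4595
for p in (0.4, 0.5, 0.515):
    # columns: p, win probability with the foul, win probability without it
    print(p, round(win_prob_foul(p, q), 4), round(win_prob_no_foul(p), 4))
```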

Finally, even if $p$ is a bit below .45, it means that the foul lowered Vanderbilt’s win probability, but not by very much at all. Probably less than every single time in the game that someone missed a shot. Certainly less than a few seconds later when LaChance missed the winning shot on the final possession. It’s interesting how in close games we focus our attention on specific plays when in fact pretty much every single play in the game turned out to be pivotal.

How do you assess whether a probabilistic forecast was successful? Put aside the question of sequential forecasts updated over time. That’s a puzzle in itself but on Monday night each forecaster will have its final probability estimate and there remains the question of deciding, on Wednesday morning, which one was “right.”

Give no credibility to pronouncements by, say, 538, that they correctly forecasted X out of 50 states. According to 538’s own model these are not independent events. Indeed the distinctive feature of 538’s election model is that the statewide errors are highly correlated. That’s why they are putting Trump’s chances at 35% as of today, when a forecast based on independence would put that probability closer to 1% based on the large number of states where Clinton has a significant (marginal) probability of winning.

So for 538 especially (but really for all the forecasters that assume even moderate correlation) Tuesday’s election is one data point. If I tell you the chance of a coin coming up Armageddon Tails is 35%, and you toss it once and it comes up Tails, you certainly have not proven me right.

The best we can do is set up a horserace among the many forecasters. The question is how do you decide which forecaster was “more right” based on Tuesday’s outcome? Of course if Trump wins then 538 was more right than every other forecaster but we do have more to go on than just the binary outcome.

Each forecaster’s model defines a probability distribution over electoral maps. Indeed they produce their estimates by simulating their models to generate that distribution and then just counting the fraction of maps that come out with an Electoral College win for Trump. The outcome on Tuesday will be a map. And we can ask, based on that map, who was more right.

What yardstick should be used? I propose maximum likelihood. Each forecaster on Monday night should publish their final forecasted distribution of maps. Then on Wednesday morning we ask which forecaster assigned the highest probability to the realized map.

That’s not the only way to do it of course, but (if you are listening, 538, etc.) whatever criterion they are going to use to decide whether their model was a success they should announce in advance.
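A minimal sketch of the maximum-likelihood horserace, assuming each forecaster publishes its simulated maps. The forecasters, the three-state maps and the sample counts below are made up for illustration; a map is the tuple of state winners in a fixed order, and each forecaster's probability for the realized map is estimated by its simulated frequency.

```python
from collections import Counter
from typing import Dict, List, Tuple

Map = Tuple[str, ...]  # winner of each state, in a fixed state order


def map_probability(simulated_maps: List[Map], realized: Map) -> float:
    """Fraction of a forecaster's simulated maps that exactly match the outcome."""
    return Counter(simulated_maps)[realized] / len(simulated_maps)


def most_likely_forecaster(forecasts: Dict[str, List[Map]], realized: Map) -> str:
    """Maximum likelihood: the forecaster who put the most probability on the realized map."""
    return max(forecasts, key=lambda name: map_probability(forecasts[name], realized))


# Hypothetical toy forecasts over three states. "Correlated" tends to move all
# states together; "Independent" spreads its mass over mixed maps.
forecasts = {
    "Correlated": [("D", "D", "D")] * 6 + [("R", "R", "R")] * 3 + [("D", "R", "D")] * 1,
    "Independent": [("D", "D", "D")] * 4 + [("R", "R", "R")] * 1 + [("D", "R", "D")] * 5,
}
realized = ("R", "R", "R")
for name, maps in forecasts.items():
    print(name, map_probability(maps, realized))  # Correlated 0.3, Independent 0.1
print(most_likely_forecaster(forecasts, realized))  # Correlated
```

With 50 real states, a finite batch of simulations may assign zero frequency to whatever map actually occurs, so for this comparison to be informative the forecasters would need to publish exact map probabilities or a very large number of draws.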

- Suppose one forecaster says the probability Trump wins is q and the other says the probability is p>q. If Trump in fact wins, who was “right?”

- Suppose one forecaster says the probability is q and the other says the probability is 100%. If Trump in fact wins, who was right?

- Suppose one forecaster said q in July and then revised to p in October. The other said q’ < q in July but then also revised to p in October. Who was right?

- Suppose one forecaster continually revised their probabilistic forecast then ultimately settled on p<1. The other forecaster steadfastly insisted the probability was 1 from beginning to end. Trump wins. Who was right?

- Suppose one forecaster’s probability estimates follow a martingale (as the laws of probability say that a true probability must do) and end at a forecast of q. The other forecaster’s “probability estimates” have a predictable trend and eventually end at a forecast of q’>q. Trump wins. Who was right?

- Suppose there are infinitely many forecasters so that for every possible sequence of events there is at least one forecaster who predicted it with certainty. Is that forecaster right?

What I wrote yesterday:

When Fox broadcasts the Super Bowl they advertise for their shows, like American Idol. But those years in which, say, ABC has the Super Bowl you will never see an ad for American Idol during the Super Bowl broadcast.

This is the sort of puzzle whose degree of puzzliness is non-monotonic in how good your economic intuition is.

If you don’t think of it in economic terms at all it doesn’t seem at all like a puzzle. Try it: ask your grandpa if he thinks that it’s odd that you never see networks advertising their shows on other networks. Of course they don’t do that.

When you apply a little economics to it, that’s when it starts to look like a puzzle. There is a price for advertising. The value of the ad is either higher or lower than the price. If it’s higher you advertise. If it’s another network, that price is the cost of advertising. If it’s your own network, that price is still a cost: the opportunity cost is the price you would earn if instead you sold the ad to a third party. If it was worth it to advertise American Idol when your own network has the Super Bowl then it should be worth it when some other network has it too.

But a little more economics removes the puzzle. Networks have market power. The way to use that market power for profit is to artificially restrict quantity and set price above marginal cost. (The marginal cost of running another 30 second ad is the cost in terms of viewership that would come from shortening, say, the halftime show by 30 seconds.)

When a network chooses whether to run an ad for its own show on its own Super Bowl broadcast it compares the value of the ad to that marginal cost. When a network chooses whether to run an ad on another network’s Super Bowl broadcast it compares the value to the price.

Indeed even if the total time for ads is given and not under control of the network (i.e. total quantity is fixed) the profit maximizing price for ads will typically only sell a fraction of that ad time. Then the marginal (opportunity) cost of the additional ads to pad that time is zero and even very low value ads like for American Idol will be shown when Fox has the Super Bowl and not when any other network does.

In fact that last observation and the fact that you never ever see any network advertise its shows on another network tells us that the value of advertising television shows is very low. Perhaps that in fact tells us that the networks themselves understand (but their paying advertisers don’t) that the value of advertising in general is very low.
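A toy illustration of the unsold-ad-time point, with made-up numbers: outside advertisers each want one slot, the number of slots is fixed, and the network charges a single revenue-maximizing price. The helper `best_uniform_price` and all values are hypothetical. At the optimal price some slots go unsold, so filling one with a low-value promo for the network's own show costs nothing, even though the same promo would not be worth the market price on a rival's broadcast.

```python
def best_uniform_price(values, capacity):
    """Revenue-maximizing single price for a fixed number of ad slots.

    Each outside advertiser buys one slot if its value is at least the price,
    so it suffices to try each advertiser value as a candidate price.
    Returns (price, revenue, slots_sold)."""
    best = (0.0, 0.0, 0)
    for price in sorted(set(values)):
        sold = min(capacity, sum(v >= price for v in values))
        if price * sold > best[1]:
            best = (price, price * sold, sold)
    return best


# Hypothetical values (say, in $ millions) that outside advertisers place on one slot.
outside_values = [5.0, 4.5, 4.0, 3.0, 2.0, 1.0, 0.5]
capacity = 6  # total ad slots in the broadcast, taken as fixed

price, revenue, sold = best_uniform_price(outside_values, capacity)
unsold = capacity - sold
print(price, revenue, sold, unsold)  # 3.0 12.0 4 2

promo_value = 0.3  # hypothetical value to the network of a promo for its own show
# On its own broadcast an unsold slot has zero opportunity cost, so the promo runs.
runs_on_own_broadcast = unsold > 0 and promo_value > 0
# On a rival's broadcast it would have to pay the market price.
buys_on_rival_broadcast = promo_value >= price
print(runs_on_own_broadcast, buys_on_rival_broadcast)  # True False
```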

When Fox broadcasts the Super Bowl they advertise for their shows, like American Idol. But those years in which, say, ABC has the Super Bowl you will never see an ad for American Idol during the Super Bowl broadcast.

More generally, networks advertise their own shows on their own network but never pay to advertise their shows on other networks. I never understood this. But I think I finally figured it out, there’s some very simple economics behind this.

Right now at Primary.guide, you can read the current betting market odds for a “contested convention” and a “brokered convention.” The definitions are as follows. A contested convention means that no candidate has 1237 delegates by the end of the last primary. A brokered convention means that no candidate wins on the first ballot at the convention.

Right now the odds of a brokered convention are 50%. Note also that the odds of a Trump nomination are 50% as well. And Trump is the only candidate with any chance of winning a majority on the first ballot (even if he doesn’t get 1237 bound delegates he will be close and no other candidate could combine their bound delegates with unbound delegates to get to a majority.)

Thus, if there is no brokered convention Trump is the nominee. The probability of no brokered convention is 50%. Thus the entire 50% probability of a Trump nomination is accounted for by the event that he wins on the first ballot.

In other words there is zero probability, according to betting markets, that Trump wins a brokered convention.

The odds of a contested convention are 80%. That means that betting markets think there is a 30% chance Trump fails to get 1237 bound delegates but still wins on the first ballot. I.e. according to betting markets we have the following three mutually exclusive events:

- Trump gets to 1237 by June 7. 20% odds

- Trump fails to get 1237 bound delegates but wins on the first ballot. 30%

- Nobody wins on the first ballot and Trump is not the nominee. 50%
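The same arithmetic spelled out in Python, using the market prices quoted above and the post's assumption that only Trump can win on the first ballot:

```python
# Betting-market prices quoted in the text, as probabilities.
p_contested = 0.80      # nobody has 1237 bound delegates after the last primary
p_brokered = 0.50       # nobody wins on the first ballot
p_trump_nominee = 0.50

# Only Trump can win on the first ballot, so "not brokered" = "Trump wins on ballot one".
p_trump_first_ballot = 1 - p_brokered
p_trump_brokered = p_trump_nominee - p_trump_first_ballot  # Trump wins a brokered convention
p_trump_1237 = 1 - p_contested                             # Trump clinches outright
p_trump_short_but_first_ballot = p_trump_first_ballot - p_trump_1237

print(round(p_trump_brokered, 2))                # 0.0
print(round(p_trump_1237, 2))                    # 0.2
print(round(p_trump_short_but_first_ballot, 2))  # 0.3
# The three events in the list exhaust all possibilities.
print(round(p_trump_1237 + p_trump_short_but_first_ballot + p_brokered, 2))  # 1.0
```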

Cato Unbound is running a discussion with this topic. Alex Tabarrok and Tyler Cowen kicked things off by suggesting that technological advances are ending asymmetric information as an important feature of markets. My response, “Let’s Hope Not” was just published. Josh Gans and Shirley Svorny are also contributing.

Here is an article on the latest Michelin stars for Chicago Restaurants. The very nice thing about this article is that it tells you which restaurants just missed getting a star. As of yesterday you would have preferred the now-starred restaurants over the now-snubbed restaurants. But probably as of today that preference is reversed.

Any punishment designed for deterrence is based on the following calculation. The potential criminal weighs the benefit of the crime against the cost, where the cost is equal to the probability of being caught multiplied by the punishment if caught.

Taking surveillance technology as given, the punishment is set in order to calibrate the right-hand side of that comparison. Optimally, the expected punishment equals the marginal social cost of the crime so that crimes whose marginal social cost outweighs the marginal benefit are deterred.

When technology allows improved surveillance, the law does not adjust automatically to keep the right-hand side constant. Indeed there is a ratchet effect in criminal law: penalties never go down.

So we naturally hate increased surveillance, even those of us who would welcome it in a first-best world where punishments adjust along with technology.
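A toy version of the ratchet argument, with hypothetical numbers: the penalty is first calibrated so that the expected punishment equals the social harm, then the detection probability rises while the penalty stays put. Crimes whose private benefit exceeds the harm (12 and 20 here), which the calibrated penalty did not deter, end up deterred; cutting the penalty in step with the new detection rate would restore the original threshold.

```python
def commits_crime(benefit: float, p_caught: float, penalty: float) -> bool:
    """The potential criminal goes ahead if the benefit exceeds the expected punishment."""
    return benefit > p_caught * penalty


harm = 10.0               # hypothetical marginal social cost of the crime
p_old, p_new = 0.2, 0.5   # hypothetical detection probabilities before/after better surveillance
penalty = harm / p_old    # calibrated so expected punishment equals harm (50.0)

benefits = [4.0, 8.0, 12.0, 20.0, 30.0]  # hypothetical private benefits of would-be crimes

before = [b for b in benefits if commits_crime(b, p_old, penalty)]
after_ratchet = [b for b in benefits if commits_crime(b, p_new, penalty)]       # penalty stuck
after_adjusted = [b for b in benefits if commits_crime(b, p_new, harm / p_new)]  # penalty cut to 20

print(before)          # [12.0, 20.0, 30.0]
print(after_ratchet)   # [30.0]
print(after_adjusted)  # [12.0, 20.0, 30.0]
```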

Suppose there’s a precedent that people don’t like. A case comes up and they are debating whether the precedent applies. Often the most effective way to argue against it is to cite previous cases where the precedent was applied and argue that the present case is different.

In order to maximally differentiate the current case they will exaggerate how appropriate the precedent was to the specific details of the previous case, even though they disagree with the precedent in principle, because that case was already decided and nothing can be done about that now.

The long run effect of this is to solidify those cases as being good examples where the precedent applies and thereby solidify the precedent itself.

These are my thoughts and not those of Northwestern University, Northwestern Athletics, the Northwestern football team, nor of the Northwestern football players.

- As usual, the emergence of a unionization movement is the symptom of a problem rather than the cause. Also as usual, a union is likely to only make the problem worse.

- From a strategic point of view the NCAA has made a huge blunder in not making a few pre-emptive moves that would have removed all of the political momentum this movement might eventually have. Few in the general public are ever going to get behind the idea of paying college athletes. Many, however, will support the idea of giving college athletes long-term health insurance and guaranteeing scholarships to players who can no longer play due to injury. Eventually the NCAA will concede on at least those two dimensions. Waiting to be forced into it by a union, or by the threat of one, will only lead to a situation that is far worse for the NCAA in the long run.

- The personalities of Kain Colter and Northwestern football add to the interest in the case because, as Rodger Sherman points out, Northwestern treats its athletes better than just about any other university, and Kain Colter is on record saying he loves Northwestern and his coaches. But these developments are bigger than the individuals involved. They stem from economic forces that were going to come to a head sooner or later anyway.

- Before taking sides, take the following line of thought for a spin. If today the NCAA lifted restrictions on player compensation, tomorrow all major athletic programs and their players would mutually, voluntarily enter into agreements where players were paid in some form or another in return for their commitment to the team. We know this because those programs are trying hard to do exactly that every single year. We call those efforts recruiting violations.

- Once that is understood it is clear that to support the NCAA’s position is to support restricting trade that its member schools and student athletes reveal, year after year, that they very much want. When you hear that universities oppose removing those restrictions, you understand that what they really oppose is removing those restrictions for their opponents. In other words, the NCAA is imposing a collusive arrangement because the NCAA has a claim to a significant portion of the rents from collusion.

- Therefore, in order to take a principled position against these developments you must point to some externality that makes this the exceptional case where collusion is justified.

- For sure, “Everyone will lose interest in college athletics once the players become true professionals” is a valid argument along these lines. Indeed it is easy to write down a model where paying players destroys the sport and yet the only equilibrium is all teams pay their players and the sport is destroyed.

- However, the statement in quotes above is almost surely false. Professional sports are pretty popular. And anyway this kind of argument is usually just a way to avoid thinking seriously about tradeoffs and incremental changes. For example, how many would lose interest in college athletics if tomorrow football players were given a 1% stake in total revenue from the sale of tickets to see them play?

- My summary of all this would be that there are clearly desirable compromises that could be found but the more entrenched the parties get the smaller will be the benefits of those compromises when they eventually, inevitably, happen.
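Here is one hypothetical way to write down the kind of model mentioned in the bullet about everyone losing interest: a two-team prisoner’s dilemma. The payoffs are invented; all that matters is that paying alone wins the recruiting battle while both paying kills interest in the sport. The sketch enumerates pure-strategy Nash equilibria.

```python
from itertools import product

ACTIONS = ("amateur", "pay")

# Hypothetical payoffs (row team, column team). Paying alone wins recruits,
# but interest in the sport collapses when both teams pay.
PAYOFFS = {
    ("amateur", "amateur"): (10, 10),
    ("amateur", "pay"): (3, 12),
    ("pay", "amateur"): (12, 3),
    ("pay", "pay"): (4, 4),
}


def pure_nash_equilibria(payoffs):
    """Profiles where neither team gains by unilaterally switching its action."""
    equilibria = []
    for a, b in product(ACTIONS, repeat=2):
        u_a, u_b = payoffs[(a, b)]
        row_best = all(u_a >= payoffs[(alt, b)][0] for alt in ACTIONS)
        col_best = all(u_b >= payoffs[(a, alt)][1] for alt in ACTIONS)
        if row_best and col_best:
            equilibria.append((a, b))
    return equilibria


print(pure_nash_equilibria(PAYOFFS))
```

With these numbers paying is strictly dominant for each team, so (pay, pay) is the unique equilibrium even though both teams prefer (amateur, amateur). An enforced restriction is what would hold teams at the collusive outcome.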

I just saw Malcolm Gladwell on The Daily Show. Apparently his book David and Goliath is about how it can actually be an advantage to have some kind of disadvantage. He mentioned that a lot of really successful people are dyslexic for example.

But it’s either an absurdity or just a redefinition of terms to say that disadvantages can be advantageous. The evidence appears to be a case of sample selection bias. Here’s a simple model. Everyone chooses between two activities/technologies. There is a safe technology, think of it as wage labor, that pays a certain return to everybody except the disadvantaged. The disadvantaged would earn a significantly lower return from the safe technology because of their disadvantage.

Then there is another technology which is highly risky. Think of it as entrepreneurship. There is free entry but only a randomly selected tiny fraction of entrants succeed and earn returns exceeding the safe technology. Everyone else fails and earns nothing. Free entry means that the expected return (or the expected utility from it) must be lower than the return from the safe technology; otherwise all the advantaged would abandon the latter.

The disadvantaged take risks because of their disadvantage and a small fraction of them succeed. All of the highly successful people have “advantageous” disadvantages.
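A quick simulation of this selection model, assuming risk-neutral agents and made-up values for the two safe returns, the success probability, and the payoff to success. The point is only structural: nobody’s disadvantage helps them, yet everyone who ends up earning more than the safe wage is disadvantaged.

```python
import random

W_SAFE = 1.0        # safe return for the advantaged (hypothetical)
W_SAFE_DIS = 0.5    # safe return for the disadvantaged (hypothetical)
P_SUCCESS = 0.01    # chance a risky entrant succeeds (hypothetical)
R_SUCCESS = 60.0    # payoff to a successful entrant; failures earn 0

# About 0.6: below the advantaged safe return, above the disadvantaged one.
EXPECTED_RISKY = P_SUCCESS * R_SUCCESS


def choose(disadvantaged: bool) -> str:
    """Risk-neutral choice: enter the risky technology only if it beats the safe one."""
    safe = W_SAFE_DIS if disadvantaged else W_SAFE
    return "risky" if EXPECTED_RISKY > safe else "safe"


def simulate(n=10_000, share_disadvantaged=0.1, seed=0):
    """Return a list of (is_disadvantaged, realized_payoff) pairs."""
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        dis = rng.random() < share_disadvantaged
        if choose(dis) == "safe":
            payoff = W_SAFE_DIS if dis else W_SAFE
        else:
            payoff = R_SUCCESS if rng.random() < P_SUCCESS else 0.0
        people.append((dis, payoff))
    return people


people = simulate()
# The "highly successful": anyone earning more than the best safe return.
stars = [dis for dis, payoff in people if payoff > W_SAFE]
print(len(stars), all(stars))
```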

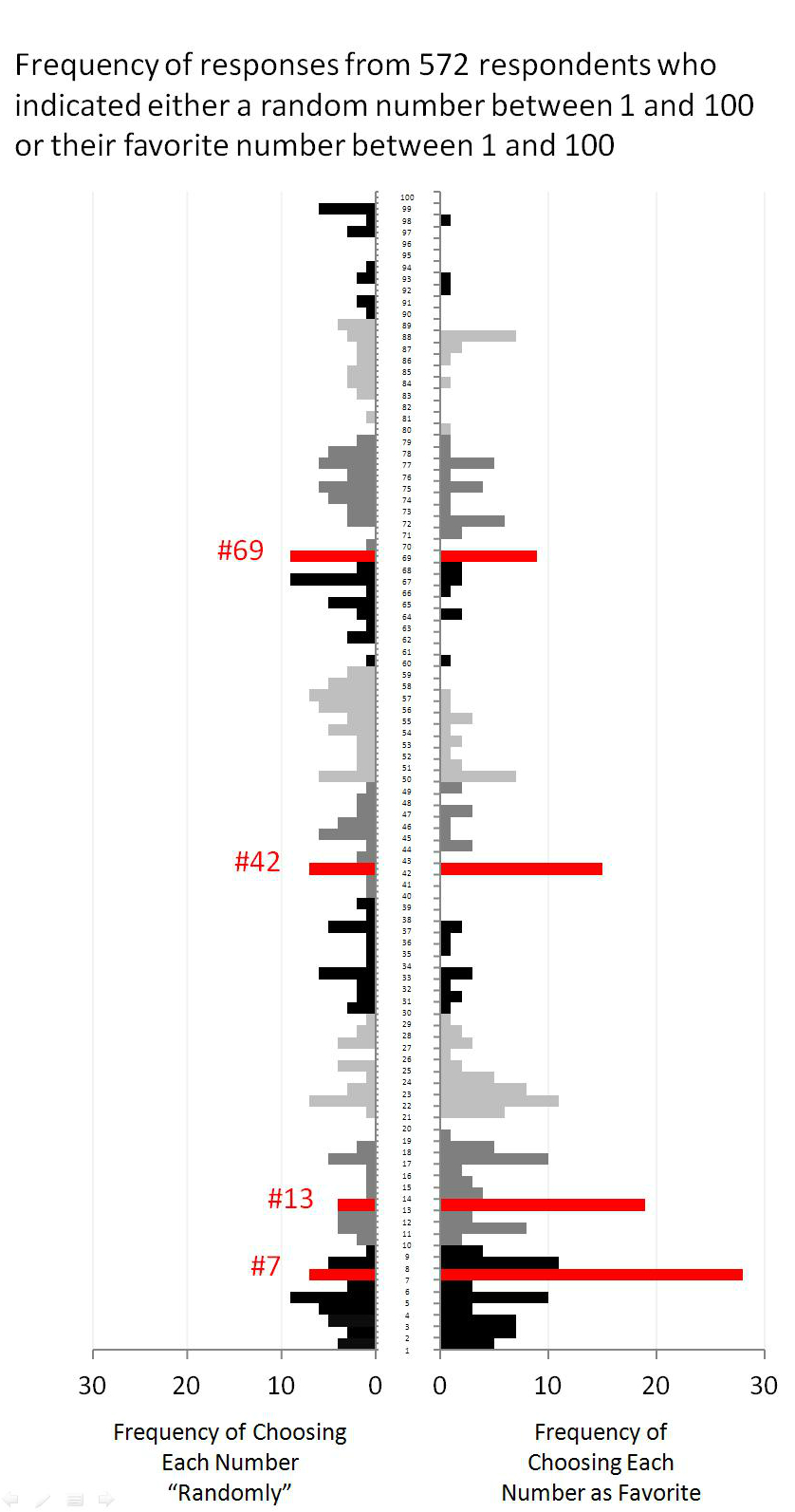

Some people were asked to name their favorite number, others were asked to give a random number:

More here. Via Justin Wolfers.

I liked this account very much:

there are two ways of changing the rate of mismatches. The best way is to alter your sensitivity to the thing you are trying to detect. This would mean setting your phone to a stronger vibration, or maybe placing your phone next to a more sensitive part of your body. (Don’t do both or people will look at you funny.) The second option is to shift your bias so that you are more or less likely to conclude “it’s ringing”, regardless of whether it really is.

Of course, there’s a trade-off to be made. If you don’t mind making more false alarms, you can avoid making so many misses. In other words, you can make sure that you always notice when your phone is ringing, but only at the cost of experiencing more phantom vibrations.

These two features of a perceiving system – sensitivity and bias – are always present and independent of each other. The more sensitive a system is the better, because it is more able to discriminate between true states of the world. But bias doesn’t have an obvious optimum. The appropriate level of bias depends on the relative costs and benefits of different matches and mismatches.

What does that mean in terms of your phone? We can assume that people like to notice when their phone is ringing, and that most people hate missing a call. This means their perceptual systems have adjusted their bias to a level that makes misses unlikely. The unavoidable cost is a raised likelihood of false alarms – of phantom phone vibrations. Sure enough, the same study that reported phantom phone vibrations among nearly 80% of the population also found that these types of mismatches were particularly common among people who scored highest on a novelty-seeking personality test. These people place the highest cost on missing an exciting call.

From Mind Hacks.
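The trade-off in the quote matches the standard equal-variance Gaussian signal detection model, in which sensitivity is d′ and bias is the response criterion. The sketch below assumes that model (the quote does not name one) along with hypothetical costs; the optimal-criterion formula is the usual likelihood-ratio rule for minimizing expected cost.

```python
import math


def phi(x: float) -> float:
    """Standard normal CDF, computed with math.erf."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def rates(d_prime: float, criterion: float) -> tuple[float, float]:
    """Sensation is N(0, 1) with no call and N(d_prime, 1) with a call;
    we conclude "it's ringing" when the sensation exceeds the criterion.
    Returns (hit rate, false-alarm rate)."""
    hit = 1.0 - phi(criterion - d_prime)
    false_alarm = 1.0 - phi(criterion)
    return hit, false_alarm


def optimal_criterion(d_prime: float, p_ring: float,
                      cost_miss: float, cost_false_alarm: float) -> float:
    """Criterion minimizing expected cost: say "ringing" when the likelihood
    ratio of call vs. no call exceeds beta."""
    beta = ((1.0 - p_ring) * cost_false_alarm) / (p_ring * cost_miss)
    return math.log(beta) / d_prime + d_prime / 2.0


# Shifting bias toward "it's ringing" (a lower criterion) trades misses for false alarms.
for c in (1.5, 1.0, 0.5):
    hit, fa = rates(d_prime=2.0, criterion=c)
    print(f"criterion={c}: hits={hit:.2f}, false alarms={fa:.2f}")

# Someone who hates missing calls ends up with a lower optimal criterion.
print(optimal_criterion(2.0, p_ring=0.1, cost_miss=1.0, cost_false_alarm=1.0))
print(optimal_criterion(2.0, p_ring=0.1, cost_miss=20.0, cost_false_alarm=1.0))
```

Raising d′ at a fixed criterion increases hits without adding false alarms, which is why more sensitivity is always better, while the criterion only moves you along the trade-off.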

It doesn’t make sense that exercise is good for you. It’s just unnecessary wear and tear on your body. Take the analogy of a car. Would it make sense to take it out for a drive up and down the block just to “exercise” it? Your car will survive for only so many miles and you are wasting them with exercise.

But exercise is supposed to pay off in the long run. Sure, you are wasting resources and subjecting your body to potential injury by exercising, but if you survive the exercise you will be stronger as a result. Still, this is hard to understand, because it’s your own body that is making itself stronger. Your body is re-allocating resources away from some other use in order to build muscles. If that’s such a good thing to do, why doesn’t your body just do it anyway? Why do you first have to weaken yourself and risk injury before your body begrudgingly does this thing that it should have done in the first place?

It must be an agency issue. Your body can either invest resources in making you stronger or use them for something else. The problem for your body is knowing which to do, i.e. when the environment is such that the investment will pay off. These physiological processes evolved long ago and over too long a time frame to be well tuned to the minute changes in the environment that determine when the investment is a good one. Your body needs a credible signal.

Physical exercise is that signal. Before people started doing it for fun, more physical activity meant that your body was in a demanding environment and therefore one in which the rewards from a stronger body are greater. So the body optimally responds to increased exercise by making itself stronger.

Under this theory, people who jog or cycle or play sports just to “stay fit” are actually making themselves less healthy overall. True they get stronger bodies but this comes at the expense of something else and also entails risk. The diversion of resources and increased risk are worth it only when the exercise signals real value from physical fitness.

My friend and Berkeley grad school classmate Gary Charness posted this on Facebook:

It has finally happened. This could be a world record. I now have 63 published and accepted papers at the age of 63. I doubt that there is anyone who *first* matched their (positive) age at a higher age. Not bad given that my first accepted paper was in 1999. I am very pleased !!

Note that Gary is setting a very strict test here. Draw a graph with age on the horizontal axis and publications on the vertical. Take any economist and plot publications by age. It’s already a major accomplishment for this plot to cross the 45 degree line at some point. It’s yet another for it to still be above the 45 degree line at age 63. But it’s absolutely astounding that Gary’s plot first crossed the 45 degree line at age 63.

(Yes Gary was my classmate at Berkeley when I was 20-something and he was 40-something.)
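Gary’s test can be stated as a small function: the first positive age at which cumulative publications reach the age, which is where the plot first touches the 45 degree line. Age zero has to be excluded because everyone has zero papers at age zero. The publication record below is entirely hypothetical (a steady three papers a year starting at 25), not Gary’s or anyone else’s.

```python
from typing import Optional


def first_crossing(pubs_by_age: dict) -> Optional[int]:
    """Earliest positive age at which cumulative publications reach the age,
    i.e. where the age/publications plot first touches the 45 degree line."""
    for age in sorted(pubs_by_age):
        if age > 0 and pubs_by_age[age] >= age:
            return age
    return None


# Hypothetical record: first paper at 25, then three papers a year.
record = {age: max(0, 3 * (age - 24)) for age in range(0, 71)}
print(first_crossing(record))  # 36
```

Even this prolific (made-up) economist first matches his age at 36, which gives a sense of how unusual a first crossing at 63 is.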

The less you like talking on the phone the more phone calls you should make. Assuming you are polite.

Unless the time of the call was pre-arranged, the person placing the call is always going to have more time to talk than the person receiving it, simply because the caller chose when to call. So if you receive a call but you are too polite to make an excuse to hang up, you are going to be stuck talking for a while.

So in order to avoid talking on the phone you should always be the one making the call. Try to time it carefully. It shouldn’t be at a time when your friend is completely unavailable to take your call because then you will have to leave a voicemail and he will eventually call you back when he has plenty of time to have a nice long conversation.

Ideally you want to catch your friend when they are just flexible enough to answer the phone but too busy to talk for very long. That way you meet your weekly quota of phone calls at minimum cost in terms of time actually spent on the phone. What could be more polite?

Matthew Rabin was here last week presenting his work with Erik Eyster about social learning. The most memorable theme of their papers is what they call “anti-imitation.” It’s the subtle incentive to do the opposite of someone in your social network even if you have the same preferences and there are no direct strategic effects.

You are probably familiar with the usual herding logic. People in your social network have private information about the relative payoff of various actions. You see their actions but not their information. If their action reveals they have strong information in favor of it you should copy them even if you have private information that suggests doing the opposite.

Most people who know this logic probably equate social learning with imitation and eventual herding. But Eyster and Rabin show that the same social learning logic very often prescribes doing the opposite of people in your social network. Here is a simple intuition. Start with a different, but simpler problem. Suppose that your friend makes an investment and his level of investment reveals how optimistic he is. His level of optimism is determined by two things, his prior belief and any private information he received.

You don’t care about his prior; it doesn’t convey any information that’s useful to you. But you do want to know what information he got. The problem is that the prior and the information are entangled, and just by observing his investment you can’t tease out whether he is optimistic because he was optimistic a priori or because he got some bullish information.

Notice that if somebody comes and tells you that his prior was very bullish, this will lead you to downgrade your own level of optimism. Because holding his final beliefs fixed, the more optimistic his prior was, the less optimistic his new information must have been, and it’s that new information that matters for your beliefs. You want to do the opposite of his prior.
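The inference step can be sketched with normal priors and signals, an assumption made here for tractability rather than the exact setup of the Eyster and Rabin papers. It also assumes that your friend’s investment reveals his posterior mean exactly. Holding his posterior fixed, a more bullish prior implies a less bullish signal.

```python
def posterior_mean(prior_mean, prior_precision, signal, signal_precision):
    """Normal-normal updating: a precision-weighted average of prior and signal."""
    total = prior_precision + signal_precision
    return (prior_precision * prior_mean + signal_precision * signal) / total


def inferred_signal(observed_posterior, prior_mean, prior_precision, signal_precision):
    """Invert posterior_mean: recover the friend's signal from his posterior and prior."""
    total = prior_precision + signal_precision
    return (total * observed_posterior - prior_precision * prior_mean) / signal_precision


friend_posterior = 1.0   # read off from his investment (hypothetical)
for friend_prior in (0.0, 2.0):
    s = inferred_signal(friend_posterior, friend_prior,
                        prior_precision=1.0, signal_precision=1.0)
    mine = posterior_mean(0.0, 1.0, s, 1.0)   # your own prior: mean 0, precision 1
    print(f"his prior={friend_prior}: his signal={s}, your belief={mine}")
```

Learning that his prior was 2 rather than 0 moves the inferred signal from 2.0 to 0.0, and your own belief from 1.0 down to 0.0.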

This is the basic force behind anti-imitation. (By the way I found it interesting that the English language doesn’t seem to have a handy non-prefixed word that means “doing the opposite of.”) Suppose now your friend got his prior beliefs from observing his friend. And now you see not only your friend’s investment level but his friend’s too. You have an incentive to do the opposite of his friend for exactly the same reason as above.

This assumes his friend’s action conveys no information of direct relevance for your own decision. And that leads to the prelim question. Consider a standard herding model where agents move in sequence, first observing a private signal and then acting. But add the following twist: each agent’s signal is relevant only for his own action and the action of the very next agent in line. Agent 3 is like you in the example above. He wants to anti-imitate agent 1. But what about agents 4, 5, 6, etc.?

If you are like me and you believe that thinking is a better path to success than not thinking, it’s hard not to take it personally when an athlete or other performer who is choking is said to be “overthinking it.” He needs to get “untracked.” And if he does and reaches peak performance, he is said to be “unconscious.”

There are experiments that seem to confirm the idea that too much thinking harms performance. But here’s a model in which thinking always improves performance and which is still consistent with the empirical observation that thinking is negatively correlated with performance.

In any activity we rely on two systems: one which is conscious, deliberative and requires “thinking.” The other is instinctive. Using the deliberative system always gives better results but the deliberation requires the scarce resource of our moment-to-moment attention. So for any sufficiently complex activity we have to ration the limited capacity of the deliberative system and offload many aspects of performance to pre-programmed instincts.

But for most activities we are not born with instinctive knowledge of how to do it. What we call “training” is endless rehearsal of an activity, which establishes that instinct. With enough training, when circumstances demand, we can offload the activity to the instinctive system in order to conserve precious deliberation for whatever novelties we are facing that truly require original thinking.

An athlete or performer who has been unsettled, unnerved, or otherwise knocked out of his rhythm finds that his instinctive system is failing him. The wind is playing tricks with his toss and so his serve is falling apart. Fortunately for him he can start focusing his attention on his toss and his serve and this will help. He will serve better as a result of overthinking his serve.

But there is no free lunch. The shock to his performance has required him to allocate more of his deliberative resources than usual to his serve, and therefore he has less available for other things. He is overthinking his serve and as a result his overall performance must suffer.

(Conversation with Scott Ogawa.)
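Here is a hypothetical numerical version of the model: two tasks (the serve and everything else), a fixed attention budget of 1, and output on each task equal to the square root of instinct plus attention. The square root is an arbitrary concave choice. Attention always raises output, yet the rattled state has both more attention on the serve and lower overall performance, so across states thinking and performance are negatively correlated.

```python
import math


def performance(attention_serve, instinct_serve, instinct_rest, budget):
    """Total output: each task's output rises with its instinct plus attention."""
    serve = math.sqrt(instinct_serve + attention_serve)
    rest = math.sqrt(instinct_rest + (budget - attention_serve))
    return serve + rest


def best_allocation(instinct_serve, instinct_rest, budget=1.0, steps=1000):
    """Grid-search the attention given to the serve; return (attention, performance)."""
    grid = [budget * i / steps for i in range(steps + 1)]
    best = max(grid, key=lambda a: performance(a, instinct_serve, instinct_rest, budget))
    return best, performance(best, instinct_serve, instinct_rest, budget)


calm = best_allocation(instinct_serve=1.0, instinct_rest=1.0)
rattled = best_allocation(instinct_serve=0.2, instinct_rest=1.0)   # the wind ruins his toss
print("calm:", calm)
print("rattled:", rattled)
```

In the rattled state the optimal attention to the serve rises from 0.5 to 0.9. Sticking with the calm allocation of 0.5 would do even worse, so the extra thinking helps; it just cannot restore the calm level of performance.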

I coach my daughter’s U12 travel soccer team. An important skill that a player of this age should be picking up is the instinct to keep her head up when receiving a pass, survey the landscape and plan what to do with the ball before it gets to her feet. The game has just gotten fast enough that if she tries to do all that after the ball has already arrived she will be smothered before there is a chance.

Many drills are designed to train this instinct and today I invented a little drill that we worked on in the warmups before our game against our rivals from Deerfield, Illinois. The drill makes novel use of a trick from game theory called a jointly controlled lottery.

Imagine I am standing at midfield with a bunch of soccer balls and the players are in a single-file line facing me just outside the penalty area. I want to feed them the ball and have them decide as the ball approaches whether they are going to clear it to my left or to my right. In a game situation, that decision is going to be dictated by the position of their teammates and opponents on the field. But since this is just a pre-game warmup we don’t have that. I could try to emulate it if I had some kind of signaling device on either flank and a system for randomly illuminating one of the signals just after I delivered the feed. The player would clear to the side with the signal on.

But I don’t have that either and anyway that’s too easy and quick to read to be a good simulation of the kind of decision a player makes in a game. So here’s where the jointly controlled lottery comes in. I have two players volunteer to stand on either side of me to receive the clearing pass. Just as I deliver the ball to the player in line the two girls simultaneously and randomly raise either one hand or two. The player receiving the feed must add up the total number of hands raised and if that number is odd clear the ball to the player on my left and if it is even clear to the player on my right.

The two girls are jointly controlling a randomization device. The parity of the number of hands is not under the control of either player. And if each player knows that the other is choosing one or two hands with 50-50 probability, then each player knows that the parity of the total will be uniformly distributed no matter how that individual player decides to randomize her own hands.
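The claim that a single 50-50 randomizer is enough can be checked exactly with fractions. The sketch assumes each girl independently raises one hand with some probability and two hands otherwise; an odd total (which can only be three hands) means clear to the left.

```python
from fractions import Fraction

HALF = Fraction(1, 2)


def prob_odd(p_one_a: Fraction, p_one_b: Fraction) -> Fraction:
    """Probability that the total number of raised hands is odd.
    Each girl independently raises one hand with the given probability,
    otherwise two. Totals are 2, 3 or 4, so the total is odd exactly
    when one girl raises one hand and the other raises two."""
    return p_one_a * (1 - p_one_b) + (1 - p_one_a) * p_one_b


# If girl A randomizes 50-50, nothing girl B does can move the odds.
for q in (Fraction(0), Fraction(1, 3), Fraction(1)):
    print(q, prob_odd(HALF, q))

# If neither girl randomizes 50-50, the lottery can be biased.
print(prob_odd(Fraction(1, 3), Fraction(1, 3)))  # 4/9
```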

And the nice thing about the jointly controlled lottery in this application is that the player receiving the feed must look left, look right, and think before the ball reaches her in order to be able to make the right decision as soon as it reaches her feet.

We beat Deerfield 3-0.