
Some time ago I had half-written a post calling for a Nobel prize for Al Roth. It was after he gave his Nancy Schwartz lecture at Kellogg and I decided not to publish it because I thought maybe it was just a little too soon. Not too soon to get the prize but too soon to expect the Nobel folks to give it to him. I am glad I was wrong.

Don’t forget his very important co-authors Tayfun Sonmez, Attila Abdulkadiroglu, and Utku Unver. These guys, Tayfun especially, were still working on matching theory when nobody else was interested and before all the practical applications (mainly coming out of their collaboration with Al) started to attract attention.

This is a time for microeconomics to celebrate. When you are on a plane and you tell the person next to you that you’re an economist, they ask you about interest rates. Everyone instinctively equates economics with macroeconomics. And that’s probably because most people have the impression that macroeconomics is where economists have the biggest impact.

But actually microeconomic theory has already had a bigger impact on your life than macroeconomic theory ever will. And there’s no politics tangled up in microeconomics. When you meet a microeconomic theorist it never once occurs to you to check the saline content of their nearest body of water.

There are no fundamental disagreements about basic principles of microeconomics. And I would say that Al Roth epitomizes what’s great about microeconomics. He has no “field:” he does classical game theory/bargaining theory and he does behavioral economics. He does theory and experiments. He theorizes about market design and he actually designs markets.

Al is the second blogger to win a Nobel prize. Compare their fields and their blogs.

I never met Shapley and I only saw him give a couple talks when he was already way past his prime. But gappy3000 reminds me that he and John Nash invented a game called Fuck Your Buddy. So that’s something. And now he has a Nobel Prize. And of course without his work there would be no prize for Roth either. David Gale should have shared the prize but he died a few years ago.

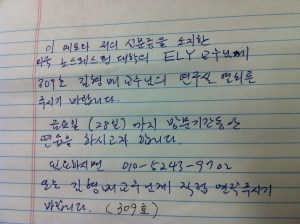

Temporary parking sign spotted near the Stanford GSB/Economics department by Michael Ostrovsky (via Google+)

In a now annual event, economists at Kellogg and the NU Econ Dept have been polled for their forecasts of who will win the Econ Nobel Prize next Monday. Jeff and I forgot to vote for each other but the rest of the predictions are below (the full write up is on the Kellogg Expertly Wrapped blog):

The Thomson-Reuters citation based predictions are Steve Ross, Tony Atkinson and Angus Deaton, and Robert Shiller.

NU and Kellogg have a large group of IO specialists and theorists. There is also a rich tradition in innovative work in incentive theory – Myerson, Holmstrom and Milgrom were at Kellogg in my department and did some of their best work here in the early 1980s.

So, I am somewhat discounting the latter three candidates with one caveat below. Tirole is also a perennial favorite given the IO bias. While I think all these researchers will get this prize eventually, their age works against them – they are too young. They did seminal work at a time when Duran Duran ruled the airwaves or perhaps the Smiths in the case of Tirole. The Nobel Committee is still sorting out the time when ABBA was Number One and Bjorn Borg won Wimbledon. (Note Swedish influence on pop culture was high in the 1970s!)

Surely there is the odd foray into the 1980s when a field has been overlooked (e.g. Krugman for new trade theory). Mechanism design and incentives have won several times, so this works against them. But one might argue Tirole is the Krugman of IO (or that Krugman is the Tirole of trade given that new trade theory used oligopoly models!).

So, that brings Tirole back in as a prediction.

The age factor also means Bob Wilson, an intellectual hero for all of us who do economic theory, is more likely than others on this list. Holmstrom and Milgrom (and Roth?) are his students and they were inspired by his tutelage and research agenda. He also worked with David Kreps. So, some prize organized around Wilson might be another possibility.

I am completely discounting the Thomson-Reuters predictions because (1) finance people can’t get the prize so soon after the financial crisis even if they do behavioral finance like Shiller and (2) there was a recent Growth and Development Nobel Symposium and it is too soon to give a prize to Deaton or Atkinson after that – the Committee needs to think it over.

I personally think the prize will go to Econometrics because there hasn’t been one since Engle and Granger. I have no expertise in the field so I won’t hazard a guess.

The abstract:

This is a line-by-line analysis of the second verse of 99 Problems by Jay-Z, from the perspective of a criminal procedure professor. It’s intended as a resource for law students and teachers, and for anyone who’s interested in what pop culture gets right about criminal justice, and what it gets wrong.

For example:

E. Line 7

So I pull over . . . At this point, Jay-Z has been seized, for purposes of Fourth Amendment analysis, because he has submitted to a show of police authority. He has thus preserved his Fourth Amendment claims. If you are stopped illegally and want to fight it later, you have to submit to the show of authority. In this case, if the police find the contraband, he’ll be able to challenge it in court. Smart decision here by Jay-Z.

The full paper is here. I thank the organic Troy Kravitz for the link.

You are planning a nice dinner and are shopping for the necessary groceries. After having already passed the green onions you are reminded that you actually need green onions upon discovering exactly that vegetable, in a bunch, bagged, and apparently abandoned by another shopper. Do you grab the bag before you or turn around and go out of your way to select your own bunch?

- This bag was selected already, and from a weakly larger supply. It is therefore likely to be better than the best you will find there now.

- On the other hand, it was abandoned. You have to ask yourself why.

- You would worry if the typical shopper’s strategy is to select a bag at random and then only carefully inspect it later. Because then it was abandoned because of some defect.

- But this is a red herring. Whatever she could see wrong with the onions you can see too. The only asymmetry of information between you and your pre-shopper is about the unchosen onions. The selection effect works unambiguously in favor of the scallions-in-hand.

- You can gain information based on where the onions were abandoned.

- First of all the fact that they were abandoned somewhere other than the main pile of onions reveals that she was not rejecting these in favor of other onions. If so, since she was going back to the onion pile she would have brought these with her. Instead she probably realized that she didn’t need the onions after all. So again, no negative signal.

- If these bunched green onions were abandoned in front of the loose green onions or the leeks or ramps, then this is an even better signal. She thought these were the best among the green onions but later discovered an even better ingredient. A sign she has discerning tastes.

- It is true though that compared to a randomly selected new bunch, these have been touched by on average one additional pair of human hands.

- And also she might be trying to poison you.

- But if she was trying to poison someone, is it her optimal strategy to put the poisoned onions into a bag and abandon them in a neighboring aisle?

- In equilibrium all bunches are equally likely to be poisoned and the bagging and abandoning ploy amounts to nothing more than cheap talk.

- But, she might not be trying to poison just any old person. She might really be targeting you, the guy who wants the best bunch of onions in the store.

- Therefore these onions are either logically the best onions in the store and therefore poisoned, or they are worse than some onions back in the big pile but then those are poisoned.

- Opt for take-out.

A great argument by Acemoglu and Robinson:

Another aspect is the divide between what the academic research in economics does — or is supposed to do — and the general commentary on economics in newspapers or in the blogosphere. When one writes a blog, a newspaper column or a general commentary on economic and policy matters, this often distills well-understood and broadly-accepted notions in economics and draws its implications for a particular topic. In original academic research (especially theoretical research), the point is not so much to apply already accepted notions in a slightly different context or draw their implications for recent policy debates, but to draw new parallels between apparently disparate topics or propositions, and potentially ask new questions in a way that changes some part of an academic debate. For this reason, simplified models that lead to “counterintuitive” (read unexpected) conclusions are particularly valuable; they sometimes make both the writer and the reader think about the problem in a totally different manner (of course the qualifier “sometimes” is important here; sometimes they just fall flat on their face). And because in this type of research the objective is not to construct a model that is faithful to reality but to develop ideas in the most transparent and simplest fashion; realism is not what we often strive for (this contrasts with other types of exercises, where one builds a model for quantitative exercise in which case capturing certain salient aspects of the problem at hand becomes particularly important). Though this is the bread and butter of academic economics, it is often missed by non-economists.

The Obama campaign claims that the Romney tax plan would result in an increase in taxes on the middle class, if you take it at its word that it would be revenue neutral. I took a look at an analysis done by the Tax Policy Center to get a more objective view. One of the authors, William Gale, worked in the CEA during the Bush I administration and another, Adam Looney, during the Obama administration. Their description of the Romney plan is:

This plan would extend the 2001-03 tax cuts, reduce individual income tax rates by 20 percent, eliminate taxation of investment income of most taxpayers (including individuals earning less than $100,000, and married couples earning less than $200,000), eliminate the estate tax, reduce the corporate income tax rate, and repeal the alternative minimum tax (AMT) and the high-income taxes enacted in 2010’s health-reform legislation.

Their preliminary conclusion:

We estimate that these components would reduce revenues by $456 billion in 2015 relative to a current policy baseline.

Therefore, over ten years this comes to $4.56 trillion. The Obama estimate seems to add in interest payments to round it out to $5 trillion.

Since the Romney plan is meant to be revenue neutral, where is this money coming from? First, some of it is recouped by eliminating various corporate tax breaks. Second, if the tax changes trigger greater growth this would generate tax revenue. Third, it could come from closing other tax breaks on individuals. Once the TPC analysis accounts for the first two factors, they come up with a figure of $360 billion per annum of reduction in federal revenue from reducing income tax and eliminating the estate tax. This favors the rich. Even if deductions like the mortgage interest deduction and tax-free health insurance are adjusted or eliminated to raise revenue, this still leaves a hole of $86 billion/annum to be raised by increasing taxes on low and middle income taxpayers. The precise definition of middle class is a matter of debate. The TPC uses incomes below $200k, while Marty Feldstein uses incomes below $100k and looks at 2009 data, not a 2015 forecast like the TPC. There are other differences between the calculations but they are really not inconsistent: tax deductions would have to be eliminated to make up for the tax cuts. In Feldstein’s analysis, the burden falls on those with incomes between $100k and $200k.

The TPC does a robustness test on its growth estimates. If you use growth rates proposed by Romney advisor Greg Mankiw, federal revenue would fall by $307 billion, still leaving $33 billion to be covered by people making less than $200k/annum.
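The arithmetic behind these figures can be retraced in a few lines. This is only a sketch using the numbers quoted in this post. The `high_income_offset` value is my own inference (the $360 billion cut minus the $86 billion hole), not a figure quoted from the TPC, and the variable names are made up for illustration.

```python
# All figures are in billions of dollars per year, as quoted in the post.
gross_cut_2015 = 456   # revenue loss before any offsets
net_cut = 360          # loss after corporate offsets and growth effects
shortfall = 86         # hole left for low and middle income taxpayers
net_cut_mankiw = 307   # loss under the faster growth rates

# Ten years at the 2015 rate, converted to trillions.
ten_year_cost_trillions = gross_cut_2015 * 10 / 1000

# Inferred, not quoted: the amount that closing tax breaks on
# high-income taxpayers would have to recover for the hole to be 86.
high_income_offset = net_cut - shortfall

# Same offset applied to the smaller revenue loss.
shortfall_mankiw = net_cut_mankiw - high_income_offset

print(ten_year_cost_trillions)  # 4.56
print(high_income_offset)       # 274
print(shortfall_mankiw)         # 33
```

Under that inferred offset, faster growth shrinks the hole from $86 billion to $33 billion, but does not close it.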

But there are two points one can add which are implicit in the TPC analysis.

If you cut taxes but then eliminate deductions, there is no tax cut in aggregate – you give with one hand and take with the other. This means the impact on the work/leisure tradeoff is minimal, hence so is the impact on economic incentives and hence so is the impact on economic growth. Hence, the main impact of the Romney tax plan is distributional along the lines suggested by the TPC. A nice clear article from the American Enterprise Institute explains why reducing taxes while eliminating deductions does not have much effect on work incentives.

More dramatically, if the Romney plan gives with one hand and takes with the other, his whole economic plan collapses. It is founded on having tax cuts and triggering trickle down. But there is no real tax cut as deductions are eliminated at the same time taxes are cut. Hence, there is no Romney tax cut plan to stimulate growth.

Updates: Linked to TPC article and also an AEI article about revenue neutral tax policy.

On the definitions of Pareto efficiency and surplus maximization and their connection. I have also updated my slides for this lecture, presenting things in a different order in a way that I think makes a bigger impact. You can find them here.

[vimeo 50833662 w=500&h=280]

When you grade exams in a large class you inevitably face the misunderstood question dilemma. A student has given a correct answer to a question but not the question you asked. As an answer to the question you asked it is flat out wrong. How much credit should you give?

It should not be zero. You can make this argument at two levels. First, ex post, the student’s answer reveals some understanding. To award zero points would be to equate this with writing nothing at all. That’s unfair.

You might respond by saying, tough luck, it is my policy not to reward misunderstanding the question. But even ex ante it is optimal to commit to a policy which gives at least partial credit to fortuitous misunderstanding. The only additional constraint at the ex ante stage is incentive compatibility. You don’t want to reward a student who interprets the question in a way that makes it easier and then supplies a correct answer to the easier question.

But you should reward a student who invents a harder question and answers that. And you should make it known in advance that you will do so. Indeed, taken to its limit, the optimal exam policy is to instruct the students to make up their own question and answer it, with harder questions (correctly answered) worth more than easier ones.

Incidentally I was once in a class where a certain professor asked exactly such a question.

I wrote about it here. I had a look at the video and it was the right call given the rule, but as I argued in the original post the rule is an unnecessary kludge. At best, it does nothing (in equilibrium.)

- Here’s another video of a cool and educational thing to do with your kids for you to bookmark for later but never actually do.

- I can name a few people who really are this obsessed with font spacing.

- I don’t even want to guess what you’re supposed to do in the one with the tandem bidet.

- The Shining with a laugh track and Seinfeld bumper music.

From an interview in Rolling Stone:

Oh, yeah, in folk and jazz, quotation is a rich and enriching tradition. That certainly is true. It’s true for everybody, but me. There are different rules for me. And as far as Henry Timrod is concerned, have you even heard of him? Who’s been reading him lately? And who’s pushed him to the forefront? Who’s been making you read him? And ask his descendants what they think of the hoopla. And if you think it’s so easy to quote him and it can help your work, do it yourself and see how far you can get. Wussies and pussies complain about that stuff. It’s an old thing – it’s part of the tradition. It goes way back. These are the same people that tried to pin the name Judas on me. Judas, the most hated name in human history! If you think you’ve been called a bad name, try to work your way out from under that. Yeah, and for what? For playing an electric guitar? As if that is in some kind of way equitable to betraying our Lord and delivering him up to be crucified. All those evil motherfuckers can rot in hell.

Read Gary Shteyngart’s painfully comic post-mortem following a surreal transatlantic flight on American Airlines:

At Heathrow, fire trucks met us because we landed “heavy,” i.e., still full of fuel we never got to spend over the Atlantic. At the terminal, a woman in a spiffy red American Airlines blazer was sent to greet us. But the language she spoke — Martian — was not easily understood, versed as we were in Spanish, English, Russian and Urdu.

Using her Martian language skills, the American Airlines woman proposed to take us “through the border” at Heathrow, for a night of rest before we resumed our journey the next morning. An apocalyptic scenario: an employee of the world’s worst airline assigned to the world’s worst border crossing at the world’s worst airport.

The Martian took us to one immigration lane, which promptly closed. Then another, with the same result. A third, ditto. Despite her blazer, the Martian was obviously not the ally we had made her out to be. So, ducking under security ropes, knocking some down entirely, we rushed the border with our passports held aloft, proclaiming ourselves the citizens of a fading superpower.

There seems to be something going on at American Airlines. As a part of bankruptcy proceedings they are trying to get concessions from the pilots’ union. The pilots appear to have found a clever way to fight back: obey the letter of the contract and in so doing violate its spirit with extreme prejudice:

Long story short, American is totally screwed. What management is discovering right now is that formal contracts can’t fully specify what it is that “doing your job properly” constitutes for an airline pilot. The smooth operation of an airline requires the active cooperation of skilled pilots who are capable of judging when it does and doesn’t make sense to request new parts and who conduct themselves in the spirit of wanting the airline to succeed. By having the judge throw out the pilots’ contract, the airline has totally lost faith with its pilots and has no ability to run the airline properly. It’s still perfectly safe, but if your goal is to get to your destination on time, you simply can’t fly American. The airline is writing checks it can’t cash when it tells you when your flights will be taking off and landing.

Taqiyah tap: Mallesh Pai

It’s the same reason the lane going in the opposite direction is always flowing faster. This is a lovely article that works through the logic of conditional proportions. I really admire this kind of lucid writing about subtle ideas. (link fixed now, sorry.)

This phenomenon has been called the friendship paradox. Its explanation hinges on a numerical pattern — a particular kind of “weighted average” — that comes up in many other situations. Understanding that pattern will help you feel better about some of life’s little annoyances.

For example, imagine going to the gym. When you look around, does it seem that just about everybody there is in better shape than you are? Well, you’re probably right. But that’s inevitable and nothing to feel ashamed of. If you’re an average gym member, that’s exactly what you should expect to see, because the people sweating and grunting around you are not average. They’re the types who spend time at the gym, which is why you’re seeing them there in the first place. The couch potatoes are snoozing at home where you can’t count them. In other words, your sample of the gym’s membership is not representative. It’s biased toward gym rats.
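Here is a small sketch of the weighted average at work in the gym example. The membership list is made up, and weekly visits stand in for fitness. The assumption is that the chance of seeing a member on any given trip is proportional to how often that member shows up.

```python
# Hypothetical gym: weekly visits for each of eight members.
visits_per_week = [0, 0, 0, 1, 1, 2, 5, 7]

def membership_average(visits):
    """Plain average: every member counts once."""
    return sum(visits) / len(visits)

def average_seen_at_gym(visits):
    """Average over the people you actually run into.

    Each member is weighted by how often they are there, so a member
    with v visits contributes v * v to the numerator.
    """
    return sum(v * v for v in visits) / sum(visits)

print(membership_average(visits_per_week))   # 2.0
print(average_seen_at_gym(visits_per_week))  # 5.0
```

The typical member goes twice a week, but the typical person on the next treadmill goes five times a week. The couch potatoes get weight zero because you never see them.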

- Is it that women like to socialize more than men do or is it that everyone, men and women alike, prefers to socialize with women?

- A great way to test for strategic effort in sports would be to measure the decibel level of Maria Sharapova’s grunts at various points in a match.

- If you are browsing the New York Times and you are over your article limit for the month, hit the stop button just after the page renders but before the browser has a chance to load the “Please subscribe” overlay. This is easy on slow browsers like your phone.

- Given the Archimedes Principle why do we think that the sea level will rise when the Polar Caps melt?

Nate Silver’s 538 Election Forecast has consistently given Obama a higher re-election probability than InTrade does. The 538 forecast is based on estimating vote probabilities from State polls and simulating the Electoral College. InTrade is just a betting market where Obama’s re-election probability is equated with the market price of a security that pays off $1 in the event that Obama wins. How can we decide which is the more accurate forecast? When you log on in the morning and see that InTrade has Obama at 70% and Nate Silver has him at 80%, on what basis can we say that one of them is right and the other is wrong?

At a philosophical level we can say they are both wrong. Either Obama is going to win or Romney is going to win so the only correct forecast would give one of them 100% chance of winning. Slightly less philosophically, is there any interpretation of the concept of “probability” relative to which we can judge these two forecasting methods?

One way is to define probability simply as the odds at which you would be indifferent between betting one way or the other. InTrade is meant to be the ideal forecast according to this interpretation because of course you can actually go and bet there. If you are not there betting right now then we can infer you agree with the odds. One reason among many to be unsatisfied with this conclusion is that there are many other betting sites where the odds are dramatically different.

Then there’s the Frequentist interpretation. Based on all the information we have (especially polls) if this situation were repeated in a series of similar elections, what fraction of those elections would eventually come out in Obama’s favor? Nate Silver is trying to do something like this. But there is never going to be anything close to enough data to be able to test whether his model is getting the right frequency.

Nevertheless, there is a way to assess any forecasting method that doesn’t require you to buy into any particular interpretation of probability. Because however you interpret it, mathematically a probability estimate has to satisfy some basic laws. For a process like an election where information arrives over time about an event to be resolved later, one of these laws is called the Martingale property.

The Martingale property says this. Suppose you checked the forecast in the morning and it said Obama 70%. And then you sit down to check the updated forecast in the evening. Before you check you don’t know exactly how it’s going to be revised. Sometimes it gets revised upward, sometimes downward. Sometimes by a lot, sometimes just a little. But if the forecast is truly a probability then on average it doesn’t change at all. Statistically we should see that the average forecast in the evening equals the actual forecast in the morning.
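To see the property in action, here is a minimal simulation under a toy model that is entirely my own assumption: the candidate's lead follows a random walk with standard-normal daily shocks, and he wins if the lead is positive on election day. In that model the true win probability has a closed form, so we can draw many possible evenings and check that the forecast does not move on average. The function names and parameters are hypothetical.

```python
import math
import random

def norm_cdf(z):
    """Standard normal CDF, built from math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def win_prob(lead, days_left):
    """True probability that the final lead is positive in the toy model."""
    return norm_cdf(lead / math.sqrt(days_left))

def average_evening_forecast(lead, days_left, n_draws=200_000, seed=0):
    """Average of tomorrow's forecast over many simulated one-day shocks.

    Requires days_left >= 2 so that at least one day remains afterward.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_draws):
        new_lead = lead + rng.gauss(0.0, 1.0)  # one day of news arrives
        total += win_prob(new_lead, days_left - 1)
    return total / n_draws

morning = win_prob(1.66, 10)                   # roughly 70% in this toy model
evening_avg = average_evening_forecast(1.66, 10)
print(round(morning, 3), round(evening_avg, 3))
```

The two printed numbers should agree up to simulation noise. Individual evenings differ from the morning number, sometimes by a lot, but the revisions cancel out on average.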

We can be pretty confident that Nate Silver’s 538 forecast would fail this test. That’s because of how it works. It looks at polls and estimates vote shares based on that information. It is an entirely backward-looking model. If there are any trends in the polls that are discernible from data these trends will systematically reflect themselves in the daily forecast and that would violate the Martingale property. (There is some trendline adjustment but this is used to adjust older polls to estimate current standing. And there is some forward looking adjustment but this focuses on undecided voters and is based on general trends. The full methodology is described here.)

In order to avoid this problem, Nate Silver would have to do the following. Each day prior to the election his model should forecast what the model is going to say tomorrow, based on all of the available information today (think about that for a moment.) He is surely not doing that.

So 70% is not a probability no matter how you prefer to interpret that word. What does it mean then? Mechanically speaking it’s the number that comes out of a formula that combines a large body of recent polling data in complicated ways. It is probably monotonic in the sense that when the average poll is more favorable for Obama then a higher number comes out. That makes it a useful summary statistic. It means that if today his number is 70% and yesterday it was 69% you can logically conclude that his polls have gotten better in some aggregate sense.

But to really make the point about the difference between a simple barometer like that and a true probability, imagine taking Nate Silver’s forecast, writing it as a decimal (70% = 0.7) and then squaring it. You still get a “percentage,” but it’s a completely different number. Still it’s a perfectly valid barometer: it’s monotonic. By contrast, for a probability the actual number has meaning beyond the fact that it goes up or down.
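A two-outcome example makes the contrast concrete. Suppose, purely as an illustration, that a 70% morning forecast is equally likely to be revised to 80% or 60% by evening. The revision pattern and the helper name are hypothetical.

```python
morning = 0.7
# (evening forecast, chance of that revision); hypothetical numbers
evening_outcomes = [(0.8, 0.5), (0.6, 0.5)]

def expected(outcomes, transform=lambda p: p):
    """Chance-weighted average of transform(forecast)."""
    return sum(chance * transform(p) for p, chance in outcomes)

avg_forecast = expected(evening_outcomes)                  # about 0.70
avg_squared = expected(evening_outcomes, lambda p: p * p)  # about 0.50

print(avg_forecast - morning)       # essentially zero: no predictable drift
print(avg_squared - morning ** 2)   # about 0.01: predictable upward drift
```

The squared number rises and falls with the original, so it works as a barometer, but it is expected to rise from 0.49 to 0.50 over the day. That predictable drift is exactly what the Martingale property rules out.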

What about InTrade? Well, if the market is efficient then it must be a Martingale. If not, then it would be possible to predict the day-to-day drift in the share price and earn arbitrage profits. On the other hand the market is clearly not efficient because the profits from arbitraging the different prices at BetFair and InTrade have been sitting there on the table for weeks.
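The cross-site arbitrage works roughly as follows. This is a sketch with hypothetical prices, assuming each site lets you buy a $1 contract on either candidate, that the price of the "other candidate wins" contract is one minus the Obama price, and that there are no fees or limits. None of these details come from the sites themselves.

```python
def arbitrage_profit(obama_price_a, obama_price_b):
    """Riskless profit per pair of $1 contracts, under the assumptions above.

    Buy "Obama wins" where it is cheap and "Obama loses" where the Obama
    contract is expensive. Exactly one of the two pays $1.
    """
    cheap, dear = sorted((obama_price_a, obama_price_b))
    cost = cheap + (1.0 - dear)
    return 1.0 - cost

# Hypothetical quotes: 70 cents on one site, 78 cents on the other.
print(round(arbitrage_profit(0.70, 0.78), 2))  # 0.08
```

Whenever the two prices differ, the pair of bets costs less than the dollar it is sure to pay, which is why a persistent gap is evidence against efficiency.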

The excellent people at NUIT have helped me put together a series of small videos that complement my Microeconomic Theory course. I start teaching today and I will be posting the videos here as the course progresses. You can find my slides here and eventually all the videos will be there too, organized by lecture. These videos are 5-10 minutes each and are meant to be high-level synopses of the main themes of each lecture. The slides as well as the videos are released to the public domain under Creative Commons (non-commercial, attribution, share-alike) licenses. The first video is on Welfare Economics and features figure skating.

The eternal Kevin Bryan writes to me:

Consider an NFL team down 15 who scores very late in the game, as happened twice this weekend. Everybody kicks the extra point in that situation instead of going for two, and is then down 8. But there is no conceivable “value of information” model that can account for this – you are just delaying the resolution of uncertainty (since you will go for two after the next touchdown). Strange indeed.

Let me restate his puzzle. If you are in a contest and success requires costly effort, you want to know the return on effort in order to make the most informed decision. In the situation he describes if you go for the 2-pointer after the first touchdown you will learn something about the return on future effort. If you make the 2 points you will know that another touchdown could win the game. If you fail you will know that you are better off saving your effort (avoiding the risk of injury, getting backups some playing time, etc.)

If instead you kick the extra point and wait until a second touchdown before going for two there is a chance that all that effort is wasted. Avoiding that wasted effort is the value of information.

The upshot is that a decision-maker always wants information to be revealed as soon as possible. But in football there is a separation between management and labor. The coach calls the plays but the players spend the effort. The coach internalizes some but not all of the players’ cost of effort. This can make the value of information negative.

Suppose that both the coach and the players want maximum effort whenever the probability of winning is above some threshold, and no effort when it’s below. Because the coach internalizes less of the cost of effort, his threshold is lower. That is, if the probability of winning falls into the intermediate range below the players’ threshold and above the coach’s threshold, the coach still wants effort from them but the players give up. Finally, suppose that after the first touchdown the probability of winning is above both thresholds.

Then the coach will optimally choose to delay the resolution of uncertainty. Because going for two is either going to move the probability up or down. Moving it up has no effect since the players are already giving maximum effort. Moving it down runs the risk of it landing in that intermediate area where the players and coach have conflicting incentives. Instead by taking the extra point the coach gets maximum effort for sure.
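Here is the argument with numbers attached. Every figure below is hypothetical: the thresholds, the 50% conversion rate, and the win probabilities were chosen only so that a missed two-point try lands in the intermediate range. I also assume the team cannot win once the players stop trying.

```python
PLAYERS_THRESHOLD = 0.25  # players give full effort only at or above this
COACH_THRESHOLD = 0.10    # coach wants effort at or above this

def realized_win_prob(p):
    """Chance of winning once the players' effort choice is accounted for."""
    return p if p >= PLAYERS_THRESHOLD else 0.0

# Kick the extra point: uncertainty is unresolved and effort continues.
p_now = 0.30
kick = realized_win_prob(p_now)

# Go for two: the probability jumps up or down with equal chance.
p_made, p_missed = 0.45, 0.15
go_for_two = 0.5 * realized_win_prob(p_made) + 0.5 * realized_win_prob(p_missed)

print(kick)        # 0.3
print(go_for_two)  # 0.225
```

After a miss the win probability of 0.15 is still above the coach's threshold, so he wants effort, but it is below the players' threshold, so they quit. Early information costs the coach 7.5 percentage points of win probability in this example, even though 0.45 and 0.15 average out to the same 0.30.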

Ethan Iverson has an excellent series of posts on the ironically-named Thelonious Monk Piano Competitions and the incentives, perverse and otherwise, they create.

My argument against competitions is basically the same thing. To my ears, there had been an astonishing amount of agreement about what jazz really is in most youthful swinging jazz since 1990. That agreement was one reason I rebelled against it. I just couldn’t see it as the jazz tradition — not my jazz tradition, anyway. I was delighted to be lifted out of the discussion entirely by Reid Anderson and David King in 2001.

It is crucial to remember that my writing on DTM reflects my own experience, passions, and blind spots. On Twitter and in the forum, several competition veterans said they played exactly how they wanted to play, in a non-conventional manner, and won anyway.

Kudos. I could have never won a competition. Indeed, my joke about playing “Confirmation” in front of Carl Allen was loaded with my own fears. Even though I’ve recorded “Confirmation” twice, with Billy Hart and Tootie Heath, I still wouldn’t want to play that in front of a bebop jury. Forget it! You couldn’t pay me enough.

I would push him on the basic economics: as long as there is a scarcity of gigs there will be competition in some form. Is it better for that competition to be formalized or to play out in the market alone? If winners gain notoriety and then gigs, and if judges reflect the preferences of audiences then formal competitions can save a lot of rent-seeking. I suppose the more cynical take is that judges have arbitrary standards and winning a contest merely turns the winner into a focal point around which venues and audiences coordinate attention. But if audiences’ tastes are that malleable is this really a loss?

You must watch Balázs Szentes’ talk at the Becker Friedman Institute. At the very least, watch up until about 7:00. You will not regret it. (Note that Gary Becker was sitting in the front row.)

In his speech to the Clinton Global Initiative:

When I was in business, I traveled to many other countries. I was often struck by the vast difference in wealth among nations. True, some of that was due to geography. Rich countries often had natural resources like mineral deposits or ample waterways. But in some cases, all that separated a rich country from a poor one was a faint line on a map. Countries that were physically right next to each other were economically worlds apart. Just think of North and South Korea.

I became convinced that the crucial difference between these countries wasn’t geography. I noticed the most successful countries shared something in common. They were the freest. They protected the rights of the individual. They enforced the rule of law. And they encouraged free enterprise. They understood that economic freedom is the only force in history that has consistently lifted people out of poverty – and kept people out of poverty.

I guess someone on his staff read a synopsis of Acemoglu and Robinson.

David Axelrod, a senior campaign adviser for President Barack Obama’s reelection campaign, trash-talked Mitt Romney on Sunday, calling last week’s Republican National Convention “a terrible failure” and claiming Romney did not receive a polling bounce.

Presidential campaign staff are always saying stuff like that: talking up how badly the other side is doing, promoting polls that show their own candidate doing well and dissing polls that don’t. While that seems like natural fighting spirit, from a strategic point of view it is sometimes questionable.

If you had the power to implant arbitrary expectations into the minds of your supporters and those of your rival, what would they be?

- You wouldn’t want your supporters to think that your candidate was very likely to lose.

- But neither would you want your supporters to think that your candidate was very likely to win.

- Instead you want your supporters to believe that the race is very close.

- But you want to plant the opposite beliefs in the mind of the opposition. You want them to think that the race is already decided. It probably doesn’t matter which way.

All of this because you want to motivate your supporters and lure the opposition into complacency. If you are David Axelrod, your candidate has a lead in the polls, and you can’t just conjure up arbitrary expectations but you can nudge your supporters’ mood one way or the other, then you want to play up the opposition, not denigrate them.

Unless it’s only the opposition that is paying attention. Indeed, suppose that campaign staffers know that the audience paying closest attention to their public statements is the opposition. Then right now we would expect to be hearing Democrats saying they are winning and Republicans saying their own campaign is in disarray.
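Here is a minimal sketch of why "the race is very close" is the belief you want your own supporters to hold. It assumes (my assumption, not something argued above) that a supporter's motivation scales with the chance that one extra vote decides the election, as in a textbook pivotal-voter model. The function names and the electorate size are hypothetical, chosen only for illustration.

```python
from math import comb


def pivotal_probability(n_other_voters, p_support):
    """Chance that the other voters split exactly evenly, so one more vote decides.

    Assumes each of the other voters independently backs your candidate
    with probability p_support (a simplifying assumption).
    """
    if n_other_voters % 2 != 0:
        raise ValueError("use an even number of other voters so a tie is possible")
    half = n_other_voters // 2
    return comb(n_other_voters, half) * p_support**half * (1 - p_support) ** half


def most_motivating_belief(n_other_voters, beliefs):
    """Return the belief about p_support under which a vote is most likely pivotal."""
    return max(beliefs, key=lambda p: pivotal_probability(n_other_voters, p))


if __name__ == "__main__":
    beliefs = [0.3, 0.4, 0.5, 0.6, 0.7]
    for p in beliefs:
        print(p, pivotal_probability(100, p))
    print("most motivating belief:", most_motivating_belief(100, beliefs))
```

Under this assumption the pivotal probability is symmetric around one half and falls off quickly on either side, which is also why it "probably doesn’t matter which way" the opposition thinks the race is decided.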

David Levine’s essay is all grown up and now a full-blown book. His goal is to “set the record straight” and document the true successes and failures of economic theory. Here is a choice passage:

One of the most frustrating experiences for a working economist is to be confronted by a psychologist, political scientist – or even in some cases Nobel Prize winning economist – to be told in no uncertain terms “Your theory does not explain X – but X happens in the real world, so your theory is wrong.” The frustration revolves around the fact that the theory does predict X and you personally published a paper in a major journal showing exactly that. One cannot intelligently criticize – no matter what one’s credentials – what one does not understand. We have just seen that standard mainstream economic theory explains a lot of things quite well. Before examining criticisms of the theory more closely it would be wise to invest a little time in understanding what the theory does and does not say.

The point is that the theory of “rational play” does not say what you probably think it says. At first glance, it is common to call the behavior of suicide bombers crazy or irrational – as for example in the Sharkansky quotation at the beginning of the chapter. But according to economics it is probably not. From an economic perspective suicide need not be irrational: indeed a famous unpublished 2004 paper by Nobel Prize winning economist Gary Becker and U.S. Appeals Court Judge Richard Posner called “Suicide: An Economic Approach” studies exactly when it would be rational to commit suicide.

The evidence about the rationality of suicide is persuasive. For example, in the State of Oregon, suicide is legal. It cannot, however, be legally done in an impulsive fashion: it requires two oral requests separated by at least 15 days plus a written request signed in the presence of two witnesses, at least one of whom is not related to the applicant. While the exact number of people committing suicide under these terms is not known, it is substantial. Hence – from an economic perspective – this behavior is rational because it represents a clearly expressed preference.

What does this have to do with suicide bombers? If it is rational to commit suicide, then it is surely rational to achieve a worthwhile goal in the process. Eliminating ones enemies is – from the perspective of economics – a rational goal. Moreover, modern research into suicide bombers (see Kix [2010]) shows that they exhibit exactly the same characteristics of isolation and depression that leads in many cases to suicide without bombing. That is: leaning to committing suicide they rationally choose to take their enemies with them.

The book is published as an e-Book by the Open Book Publishers. You can download a PDF for a nominal fee or even read it for free on the website. Here’s more from David, writing about Kahneman’s Thinking, Fast and Slow.

The two political parties hold conventions to nominate their Presidential candidates. These are huge affairs requiring large blocks of hotel space and a venue, usually something the size of a basketball arena. That all requires a lot of advance planning and therefore a commitment to a date.

Presumably there is an advantage to either going first or second. It may be that first impressions matter the most and so going first is desirable. Or it may be that people remember the most recent convention more vividly so that going second is better. Whichever it is, the incumbent party has an advantage in the convention timing game.

The incumbent party already has a nominee. The convention doesn’t accomplish anything formal and is really just an opportunity to advertise its candidate and platform. The challenger, by contrast, has to hold the convention in order to formally nominate its candidate. This is not just to make formal what has usually been decided much earlier in the primaries. Federal law allows the candidate to use some campaign money only after he is formally nominated.

So the incumbent has the freedom to wait as long as necessary for the challenger to commit to a date and then immediately respond by scheduling its own convention either directly before or directly after the challenger’s, depending on which is more desirable. The fact that this year the Democratic National Convention followed immediately on the heels of the Republicans’ suggests that going last is better.

I haven’t seen the data but this theory makes the following prediction. In every election with an incumbent candidate the incumbent party’s convention is either always before or always after the challenger’s. And in elections with no incumbent, the sequencing is unpredictable just on the basis of party.
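For anyone who does have the data, the first half of that prediction is easy to check. The sketch below assumes a hypothetical record format of my own invention, one dict per election with the keys `has_incumbent` and `incumbent_party_first`; it does not contain any real convention dates.

```python
def incumbent_order_is_consistent(elections):
    """Check the first half of the prediction.

    elections: iterable of dicts with boolean keys
      'has_incumbent'          - an incumbent candidate was running
      'incumbent_party_first'  - the incumbent party's convention came first
    (hypothetical field names, chosen for illustration).

    Returns True if, among elections with an incumbent candidate, the
    incumbent party's convention was always first or always second.
    """
    orders = {
        e["incumbent_party_first"] for e in elections if e["has_incumbent"]
    }
    return len(orders) <= 1


if __name__ == "__main__":
    # Made-up placeholder rows, not real elections.
    sample = [
        {"has_incumbent": True, "incumbent_party_first": False},
        {"has_incumbent": False, "incumbent_party_first": True},
        {"has_incumbent": True, "incumbent_party_first": False},
    ]
    print(incumbent_order_is_consistent(sample))
```

The second half of the prediction (no pattern by party in open-seat years) would need a proper statistical test and is not covered here.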

From a design point of view with lots of beautiful pictures, like this one:

This part was news to me:

The man to finally surpass the two-bar brewing barrier was Milanese café owner Achille Gaggia. Gaggia transformed the Jules Verne hood ornament into a chromed-out counter-top spaceship with the invention of the lever-driven machine. In Gaggia’s machine, invented after World War II, steam pressure in the boiler forces the water into a cylinder where it is further pressurized by a spring-piston lever operated by the barista. Not only did this obviate the need for massive boilers, but it also drastically increased the water pressure from 1.5-2 bars to 8-10 bars. The lever machines also standardized the size of the espresso. The cylinder on lever groups could only hold an ounce of water, limiting the volume that could be used to prepare an espresso. With the lever machines also came some new jargon: baristas operating Gaggia’s spring-loaded levers coined the term “pulling a shot” of espresso. But perhaps most importantly, with the invention of the high-pressure lever machine came the discovery of crema – the foam floating over the coffee liquid that is the defining characteristic of a quality espresso. A historical anecdote claims that early consumers were dubious of this “scum” floating over their coffee until Gaggia began referring to it as “caffe creme”, suggesting that the coffee was of such quality that it produced its own creme. With high pressure and golden crema, Gaggia’s lever machine marks the birth of the contemporary espresso.

By the way this is the 2000th Cheap Talk post.

Micropayments haven’t materialized. My guess is that’s because of a combination of two reasons. First, there are the technological/network externality barriers. Nobody as of yet has put forth a system for micropayments that is easy and compelling enough to spur widespread adoption.

The second reason is that micropayments may not actually be the most efficient way to achieve their purpose. A monetary payment is a one-to-one transfer of value from payor to payee. Right now many of the online transactions that micropayments would facilitate are actually financed with a more efficient means of payment. Advertisements are the best example. You want to watch a video on YouTube, you have to watch a little bit of an ad first.

This is a transfer of value: you lose some time, the advertiser gains your attention. But this transfer is not one-for-one because your opportunity cost of time is not identically equal to the value to the advertiser of your attention. And given the widespread use of advertisements in markets where monetary payments are possible, we can infer that this transfer is actually positive-sum. That is, the cost of your time is lower than the value of capturing your attention.
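To make the accounting concrete, here is a small sketch comparing the two ways of financing the same video. All the numbers and names (`time_cost`, `attention_value`, `ad_fee`) are hypothetical, in arbitrary units; the only thing taken from the argument above is that the viewer's time cost is lower than the advertiser's value of attention.

```python
def micropayment_payoffs(price):
    """Viewer pays the publisher directly: a one-to-one transfer."""
    return {"viewer": -price, "publisher": price}


def ad_payoffs(time_cost, attention_value, ad_fee):
    """Viewer pays in time; the advertiser values the attention and pays the publisher.

    ad_fee is itself just a transfer from advertiser to publisher.
    """
    return {
        "viewer": -time_cost,
        "advertiser": attention_value - ad_fee,
        "publisher": ad_fee,
    }


def total_surplus(payoffs):
    """Sum of everyone's gains and losses from the transaction."""
    return sum(payoffs.values())


if __name__ == "__main__":
    # Hypothetical values: the publisher collects 2.0 either way.
    print(total_surplus(micropayment_payoffs(price=2.0)))
    print(total_surplus(ad_payoffs(time_cost=1.0, attention_value=3.0, ad_fee=2.0)))
```

The publisher receives the same amount in both cases; the difference is that the ad version leaves `attention_value - time_cost` on the table for someone to enjoy, while the cash version nets out to zero.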

Microbarter is more efficient than micropayment. So we should expect to see even more of it. And we should expect that even more efficient forms of microbarter will appear. And indeed we soon will. Google has apparently figured out that information can be an even more efficient currency than attention:

Eighteen months ago — under non disclosure — Google showed publishers a new transaction system for inexpensive products such as newspaper articles. It worked like this: to gain access to a web site, the user is asked to participate to a short consumer research session. A single question, a set of images leading to a quick choice.

Once you think in terms of microbarter and positive-sum transactions there are probably many more ideas you could come up with. But a few questions too. Why is there not already a market which enables you to sell your valuable asset (attention, information, etc.) for money? After all, if it could be monetized and the market is competitive, then the usual arguments will imply that at the margin the exchange will be zero-sum and the rationale for barter disappears.

(Ghutrah grip: Mallesh Pai)

In a meeting a guy’s phone goes off because he just received a text and he forgot to silence it. What kind of guy is he?

- He’s the type who is a slave to his smartphone, constantly texting and receiving texts. Statistically this must be true because conditional on someone receiving a text it is most likely the guy whose arrival rate of texts is the highest.

- He’s the type who rarely uses his phone for texting and this is the first text he has received in weeks. Statistically this must be true because conditional on someone forgetting to silence his phone it is most likely the guy whose arrival rate of texts is the lowest.
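Both stories are Bayes' rule with a different term doing the work, so which one wins depends on the numbers. The sketch below assumes (my modelling choices, not anything stated above) that texts arrive as a Poisson process and that each type forgets to silence his phone with some fixed probability. The type names, rates, and probabilities are all made up for illustration.

```python
from math import exp


def posterior_given_ring(types, meeting_hours=1.0):
    """Posterior over types given that a phone audibly received a text.

    types: dict mapping a type name to a tuple
      (prior, texts_per_hour, prob_forgets_to_silence).
    The phone rings only if a text arrives during the meeting AND the
    owner forgot to silence it, so the weight is the product of the two.
    """
    weights = {}
    for name, (prior, rate, p_forget) in types.items():
        p_text = 1 - exp(-rate * meeting_hours)  # at least one Poisson arrival
        weights[name] = prior * p_text * p_forget
    total = sum(weights.values())
    if total == 0:
        raise ValueError("the observed event has zero probability under every type")
    return {name: w / total for name, w in weights.items()}


if __name__ == "__main__":
    # Hypothetical numbers: the heavy texter rarely forgets, the light one often does.
    careful_heavy = {"slave": (0.5, 10.0, 0.01), "rare": (0.5, 0.01, 0.5)}
    very_careful_heavy = {"slave": (0.5, 10.0, 0.001), "rare": (0.5, 0.01, 0.5)}
    print(posterior_given_ring(careful_heavy))
    print(posterior_given_ring(very_careful_heavy))
```

With the first set of numbers the heavy texter is the more likely culprit; make him ten times more careful about silencing and the rare texter takes over. The first bullet conditions on a text arriving, the second on the phone being unsilenced, and the right answer conditions on both.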